What’s the Maximum Server Room Temperature?

Server room temperature is one of the most important metrics in any data center environment. Keeping the equipment inside at a consistent temperature and humidity is a critical part of a facility manager’s job. So what is the maximum server room temperature?

It is essential for data center managers to stay informed of the guidelines they should follow to keep their facilities in tip-top shape. A deviation of 1°C or 2°C in either direction may not seem like much, but it can make a huge difference in the long run.

What is the maximum temperature a server room can comfortably operate at? What is the minimum? The answers to these questions will give IT managers an idea of the accepted range at which they can keep their facilities.

Server Room Temperature Baseline Standards

The American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE) publishes the most widely cited guidelines for air temperature and humidity in data center environments. In 2011, ASHRAE Technical Committee 9.9 set the allowable envelope for a Class A1 data center at 59°F to 89.6°F (15°C to 32°C), with relative humidity (RH) between 20% and 80%. ASHRAE advises that facilities should not operate outside these limits.

Servers should be kept between 59°F and 89.6°F.

Evolving Requirements And Possibilities

While ASHRAE’s guidelines are often cited in discussions around maximum server room temperature, it’s important to understand that they include both recommended and allowable operating ranges. This distinction plays a key role in optimizing environmental control strategies.

The recommended range, which is ideal for maintaining long-term equipment reliability and efficiency, is 18°C to 27°C (64.4°F to 80.6°F) for Class A1 data centers. This is the temperature range most data center managers should aim to maintain, as it strikes the right balance between cooling efficiency and hardware protection.

In contrast, the allowable range for Class A1 equipment is broader, from 15°C to 32°C (59°F to 89.6°F). Equipment can technically operate safely within this band for limited periods, but prolonged exposure toward the extremes—especially the higher end—can increase the risk of thermal stress, reduce component lifespan, and void warranties in some cases.
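The two bands can be expressed as a small check on an intake reading. The following is a minimal sketch that uses only the Class A1 figures quoted in this article; the function names are illustrative and not part of any ASHRAE or vendor API.

```python
# Class A1 limits as quoted in this article (degrees Celsius).
RECOMMENDED_C = (18.0, 27.0)
ALLOWABLE_C = (15.0, 32.0)


def f_to_c(temp_f: float) -> float:
    """Convert an absolute temperature from Fahrenheit to Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0


def classify_intake_temp(temp_c: float) -> str:
    """Return 'recommended', 'allowable', or 'out_of_range' for a reading."""
    if RECOMMENDED_C[0] <= temp_c <= RECOMMENDED_C[1]:
        return "recommended"
    if ALLOWABLE_C[0] <= temp_c <= ALLOWABLE_C[1]:
        return "allowable"
    return "out_of_range"


if __name__ == "__main__":
    # Hypothetical readings in Fahrenheit.
    for reading_f in (72.0, 85.0, 95.0):
        print(reading_f, classify_intake_temp(f_to_c(reading_f)))
```

A reading that lands in the "allowable" band is a prompt to investigate rather than an emergency, which matches the limited-period wording above.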

Humidity Controls

Humidity control is just as critical. While the allowable humidity range is 20% to 80% relative humidity (RH), the recommended RH range is narrower—40% to 60%—to prevent issues such as static discharge (if too dry) or condensation (if too humid).

For best results, maintaining conditions within the recommended ranges is advisable. This becomes even more crucial when deploying high-density racks, where internal heat buildup can significantly exceed ambient room conditions. Monitoring intake air temperature at the rack level—such as with AKCP’s thermal map sensors—offers a more accurate picture of actual conditions, enabling proactive cooling management and helping avoid hotspots before they affect equipment.
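To illustrate why rack-level readings matter, here is a rough sketch that compares a room-wide average with per-rack intake maxima. The rack names and readings are made up for the example, and the code does not use any AKCP API; it only assumes readings are available as plain numbers.

```python
from statistics import mean

RECOMMENDED_MAX_C = 27.0  # upper end of the recommended range quoted above


def find_hotspots(rack_readings: dict[str, list[float]],
                  limit_c: float = RECOMMENDED_MAX_C) -> dict[str, float]:
    """Map each rack whose hottest intake sensor exceeds limit_c to that reading."""
    return {
        rack: max(temps)
        for rack, temps in rack_readings.items()
        if temps and max(temps) > limit_c
    }


# Hypothetical readings: bottom, middle, top of each rack (degrees Celsius).
readings = {
    "rack-A1": [21.5, 23.0, 24.8],
    "rack-A2": [22.0, 25.5, 28.4],
    "rack-B1": [20.9, 22.1, 23.3],
}

room_average = mean(t for temps in readings.values() for t in temps)
hotspots = find_hotspots(readings)
print(f"room average: {room_average:.1f} C, hotspots: {hotspots}")
```

In this made-up data set the room average sits comfortably inside the recommended range, yet the top of one rack is already above it.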

By understanding the difference between recommended and allowable thresholds, and aligning environmental control strategies accordingly, data center operators can extend hardware lifespan, maintain compliance, and reduce operational risk.

Energy Efficiency of Higher Server Room Temperature

Is the “cooler is better” mentality coming to an end? The industry is investigating whether raising setpoints by a few degrees lets IT operations continue normally while conserving energy and lowering costs. The temperature of the water used for cooling is rising as well.

A view of the server pods in the Microsoft Dublin data center, a design that allows the company to run its servers in temperatures up to 95°F

Most data centers keep the server room between 68°F and 72°F, and some run as low as 55°F. Raising the baseline temperature reduces the energy consumed for air conditioning and therefore saves money. A commonly cited estimate is that each degree of upward movement in the setpoint saves about 4% in energy expenses. Google, Intel, Sun, and HP are among the companies that have promoted higher data center baseline temperatures as a way to cut costs.
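The 4% figure can be turned into a quick back-of-the-envelope estimate. The text does not say whether the savings add up linearly or compound from degree to degree, so this sketch computes both as assumptions; neither is a measured result.

```python
SAVINGS_PER_DEGREE_F = 0.04  # rule of thumb quoted above; not a measured value


def linear_savings(degrees_raised_f: float) -> float:
    """Fractional saving if each degree saves 4% of the original bill."""
    return min(1.0, SAVINGS_PER_DEGREE_F * degrees_raised_f)


def compounded_savings(degrees_raised_f: float) -> float:
    """Fractional saving if each degree saves 4% of the remaining bill."""
    return 1.0 - (1.0 - SAVINGS_PER_DEGREE_F) ** degrees_raised_f


if __name__ == "__main__":
    # Hypothetical example: raising the setpoint from 70°F to 75°F.
    raised = 75.0 - 70.0
    print(f"linear:     {linear_savings(raised):.1%}")
    print(f"compounded: {compounded_savings(raised):.1%}")
```

The two readings diverge as the setpoint change grows, so any real business case should rest on metered data from the facility rather than on the rule of thumb.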

However, raising the setpoint can leave less time to recover from a cooling failure, so it is only recommended for operators with a thorough understanding of their facility’s cooling conditions.
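The trade-off can be made concrete with simple arithmetic: the closer the setpoint is to the upper limit, the less headroom remains when cooling stops. The sketch below assumes a constant rate of temperature rise, which is a simplification, and the rate used in the example is hypothetical. The 32°C limit is the Class A1 allowable maximum cited earlier.

```python
def ride_through_minutes(setpoint_c: float, limit_c: float,
                         rise_c_per_min: float) -> float:
    """Minutes until intake air reaches limit_c after cooling stops.

    Assumes a constant rate of rise, which real rooms only approximate.
    """
    if rise_c_per_min <= 0:
        raise ValueError("rise_c_per_min must be positive")
    return max(0.0, (limit_c - setpoint_c) / rise_c_per_min)


if __name__ == "__main__":
    LIMIT_C = 32.0        # Class A1 allowable maximum
    RISE_C_PER_MIN = 1.0  # hypothetical; measure this for a real facility
    for setpoint in (20.0, 24.0, 27.0):
        minutes = ride_through_minutes(setpoint, LIMIT_C, RISE_C_PER_MIN)
        print(f"setpoint {setpoint:.0f} C -> {minutes:.0f} min of headroom")
```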

Examples of Use Cases

Improved monitoring and airflow control are allowing data center operators to run at higher temperatures in production. In some cases, raising the server room temperature allows businesses to run facilities that use chillers sparingly or not at all.

Chillers, which are used to chill water, are common in data center cooling systems, but they require a lot of power to run. As attention to power costs grows, many data centers are reducing their reliance on chillers to improve energy efficiency.

But can servers withstand the heat? Studies suggest that servers are significantly more durable than previously thought. In one widely cited study, Intel ran a test data center environment using only outside air for cooling. The study found that, with temperatures elevated to 80°F, hardware failure rates were substantially lower than predicted. Meanwhile, many data centers waste energy by over-cooling to compensate for a lack of granular control.

As a result, companies have grown more comfortable operating servers in warmer environments. eBay’s facility in Phoenix and a data center operator in the Middle East run without chillers. Both Google and Microsoft use fresh-air cooling instead of chillers in their facilities.

Raising the Chiller Water’s Temperature

For facilities using chillers, raising the cooling setpoint in the data center can allow the manager to also raise the temperature of the water in the chillers, reducing the amount of energy required to cool the water and thus saving energy costs. Facebook retooled the cooling system in one of its existing data centers in Santa Clara, California, and trimmed its annual energy bill by $229,000. While many factors were involved, optimizing the cold aisle and server fan speed allowed Facebook to raise the temperature at the CRAH return from 72°F to 81°F. The higher air temperature in turn allowed a higher temperature for the supply water coming from its chillers, which requires less energy for refrigeration. The chiller water supply temperature was raised by 8°F, from 44°F to 52°F.
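Because this article mixes Fahrenheit and Celsius, it is worth noting that a temperature change converts differently from an absolute temperature: the 32-degree offset drops out. A small sketch using the Facebook figures above (the helper names are illustrative):

```python
def f_to_c(temp_f: float) -> float:
    """Convert an absolute temperature from Fahrenheit to Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0


def delta_f_to_c(delta_f: float) -> float:
    """Convert a temperature difference from Fahrenheit to Celsius degrees."""
    return delta_f * 5.0 / 9.0


if __name__ == "__main__":
    # Chiller supply water: 44°F -> 52°F.
    print(f"water: {f_to_c(44.0):.1f} C -> {f_to_c(52.0):.1f} C, "
          f"a rise of {delta_f_to_c(52.0 - 44.0):.1f} C")
    # CRAH return air: 72°F -> 81°F.
    print(f"air:   {f_to_c(72.0):.1f} C -> {f_to_c(81.0):.1f} C, "
          f"a rise of {delta_f_to_c(81.0 - 72.0):.1f} C")
```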

Lawrence Berkeley Chill Off 2

In one test, the Chill Off 2 unintentionally demonstrated that the Clustered Systems design could attain even better efficiency. During testing, a chiller failure caused the temperature of the chilled water used in the prototype’s cooling distribution unit (CDU) to rise from 44°F to 78°F. CPU temperatures in the Clustered Systems servers climbed throughout the 46-minute cooling interruption, yet the servers continued to run. “Observations during the use of 78°F (25.5°C) chilled water temperature indicate that the Clustered Systems design potentially can be operated with very low-cost cooling water, providing additional energy savings compared to the test results,” according to the Lawrence Berkeley National Laboratory report on the Clustered Systems technology.

Others are raising the temperature of the cooling water on purpose. For the Swiss Federal Institute of Technology Zurich, IBM deployed a supercomputer cooled by hot water. The Aquasar cooling system uses water at temperatures up to 140°F and consumes up to 40% less energy than an air-cooled machine. The system also reuses waste heat to warm university buildings, substantially lowering Aquasar’s carbon footprint.

Humidity Management Strategies for Server Rooms

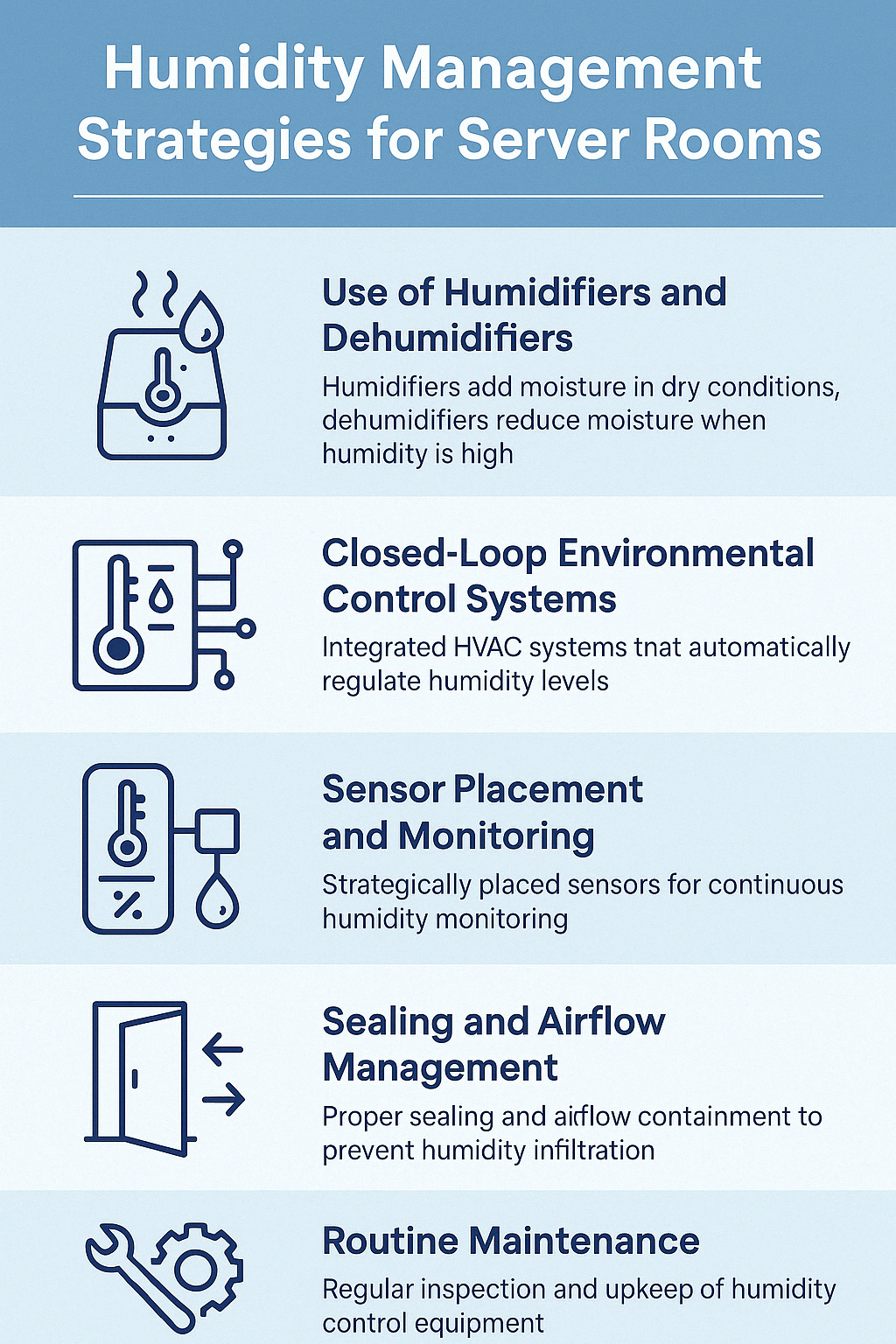

Controlling humidity is just as critical as maintaining temperature in a server room environment. Fluctuations outside the optimal range can lead to condensation, corrosion, or electrostatic discharge—all of which pose serious risks to IT equipment. ASHRAE recommends keeping relative humidity (RH) within 40% to 60%, even though the broader allowable range is 20% to 80%.
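Condensation risk depends on the dew point, which can be estimated from air temperature and RH. The sketch below uses the Magnus approximation with one commonly used coefficient set; the coefficients, the 2°C safety margin, and the function names are choices made for this example, not values taken from ASHRAE.

```python
import math

# Magnus approximation coefficients (one commonly used set, over liquid water).
MAGNUS_A = 17.62
MAGNUS_B = 243.12  # degrees Celsius


def dew_point_c(temp_c: float, rh_percent: float) -> float:
    """Approximate dew point in Celsius from air temperature and RH."""
    if not 0.0 < rh_percent <= 100.0:
        raise ValueError("rh_percent must be in (0, 100]")
    gamma = math.log(rh_percent / 100.0) + MAGNUS_A * temp_c / (MAGNUS_B + temp_c)
    return MAGNUS_B * gamma / (MAGNUS_A - gamma)


def condensation_risk(surface_temp_c: float, air_temp_c: float,
                      rh_percent: float, margin_c: float = 2.0) -> bool:
    """True if a surface is within margin_c of the dew point, or below it."""
    return surface_temp_c <= dew_point_c(air_temp_c, rh_percent) + margin_c


if __name__ == "__main__":
    # Hypothetical room at 24°C and 50% RH with a 10°C chilled-water pipe.
    print(f"dew point: {dew_point_c(24.0, 50.0):.1f} C")
    print("pipe at risk:", condensation_risk(10.0, 24.0, 50.0))
```

A cold surface such as an uninsulated chilled-water pipe can sit below the dew point even when room RH is inside the recommended range, which is why surface temperature is checked separately here.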

To maintain consistent humidity levels, data center operators should adopt a combination of environmental control strategies and proactive monitoring:

1. Use of Humidifiers and Dehumidifiers

Humidifiers are essential in dry climates or during colder months when indoor RH drops significantly. They add moisture to the air, helping avoid static buildup that can damage sensitive electronics.

Dehumidifiers are used in high-humidity environments to prevent condensation, mold growth, and corrosion of circuit boards and metal enclosures.

2. Closed-Loop Environmental Control Systems

Integrated HVAC systems equipped with humidistats can automatically regulate air moisture levels. These systems adjust humidification and dehumidification in real-time based on sensor feedback, ensuring RH stays within the safe zone.
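A humidistat of this kind can be modeled as an on/off controller with a deadband, so the equipment does not rapidly switch on and off around a threshold. The following is a simplified sketch; the 40% and 60% thresholds come from the recommended range above, while the 3-point deadband and the class itself are assumptions for illustration.

```python
class Humidistat:
    """On/off humidity control with hysteresis (deadband)."""

    def __init__(self, low: float = 40.0, high: float = 60.0,
                 deadband: float = 3.0) -> None:
        self.low = low
        self.high = high
        self.deadband = deadband
        self.mode = "idle"  # one of: idle, humidify, dehumidify

    def update(self, rh: float) -> str:
        """Feed one RH reading (percent) and return the resulting mode."""
        if self.mode == "idle":
            if rh < self.low:
                self.mode = "humidify"
            elif rh > self.high:
                self.mode = "dehumidify"
        elif self.mode == "humidify" and rh >= self.low + self.deadband:
            # Keep humidifying until RH is safely back above the threshold.
            self.mode = "idle"
        elif self.mode == "dehumidify" and rh <= self.high - self.deadband:
            self.mode = "idle"
        return self.mode


if __name__ == "__main__":
    stat = Humidistat()
    for rh in (50.0, 39.0, 41.0, 43.5, 61.0, 59.0, 56.0):
        print(rh, stat.update(rh))
```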

For high-precision environments, dual control of temperature and humidity using PID algorithms can further optimize control performance.
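As a rough illustration of the PID idea, here is a minimal controller with output clamping and simple anti-windup, exercised against a toy room model. The gains, the output convention (positive means humidify, negative means dehumidify), and the room model are all assumptions for the sketch; real HVAC controllers are tuned per installation. A second instance with its own setpoint could handle temperature in the same way.

```python
class PID:
    """Minimal PID controller with output clamping and simple anti-windup."""

    def __init__(self, kp: float, ki: float, kd: float, setpoint: float,
                 out_min: float = -1.0, out_max: float = 1.0) -> None:
        self.kp, self.ki, self.kd = kp, ki, kd
        self.setpoint = setpoint
        self.out_min, self.out_max = out_min, out_max
        self._integral = 0.0
        self._prev_error = None

    def update(self, measurement: float, dt: float) -> float:
        """Return the clamped control output for one measurement."""
        error = self.setpoint - measurement
        derivative = (0.0 if self._prev_error is None
                      else (error - self._prev_error) / dt)
        self._prev_error = error
        integral = self._integral + error * dt
        raw = self.kp * error + self.ki * integral + self.kd * derivative
        output = max(self.out_min, min(self.out_max, raw))
        if output == raw:
            # Anti-windup: only accumulate the integral while unsaturated.
            self._integral = integral
        return output


def simulate_rh(pid: PID, rh: float = 35.0, steps: int = 200,
                dt: float = 1.0, plant_gain: float = 2.0) -> float:
    """Toy room model (an assumption): RH moves by plant_gain * output per step."""
    for _ in range(steps):
        rh += plant_gain * pid.update(rh, dt) * dt
    return rh


if __name__ == "__main__":
    rh_pid = PID(kp=0.1, ki=0.01, kd=0.0, setpoint=50.0)  # hypothetical gains
    print(f"final RH: {simulate_rh(rh_pid):.2f}%")
```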

3. Sensor Placement and Monitoring

Strategic placement of humidity sensors throughout the room and inside equipment racks allows for early detection of RH deviations.

AKCP’s sensorProbe+ with dual temperature and humidity sensors enables continuous monitoring at critical points. When paired with AKCPro Server software, historical trend data can be analyzed to identify recurring humidity issues and fine-tune HVAC response.
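One simple form of trend analysis is to check whether out-of-range readings cluster at particular hours of the day. The sketch below works on plain (hour, RH) pairs and does not reflect the AKCPro Server export format or API; the data layout, the 50% threshold, and the sample values are hypothetical.

```python
from collections import defaultdict


def out_of_range_by_hour(samples, low: float = 40.0, high: float = 60.0):
    """samples: iterable of (hour_of_day, rh_percent) pairs.

    Returns {hour: fraction of readings outside [low, high]}.
    """
    totals = defaultdict(int)
    bad = defaultdict(int)
    for hour, rh in samples:
        totals[hour] += 1
        if not low <= rh <= high:
            bad[hour] += 1
    return {hour: bad[hour] / totals[hour] for hour in sorted(totals)}


def recurring_problem_hours(samples, threshold: float = 0.5):
    """Hours where at least `threshold` of readings were out of range."""
    return [hour for hour, fraction in out_of_range_by_hour(samples).items()
            if fraction >= threshold]


# Hypothetical history: readings at 03:00 and 14:00 over three days.
history = [
    (3, 38.0), (14, 52.0),
    (3, 37.5), (14, 55.0),
    (3, 41.0), (14, 61.0),
]

print(out_of_range_by_hour(history))
print(recurring_problem_hours(history))
```

In this made-up history the early-morning readings are low on most days, which would point toward adjusting humidification overnight rather than treating each alert as a one-off.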

4. Sealing and Airflow Management

Ensure proper sealing of the server room to prevent infiltration of humid outside air, especially in facilities near coastal or tropical areas.

Implement containment systems (hot aisle/cold aisle) to minimize the mixing of air streams and reduce environmental variability across the data hall.

5. Routine Maintenance

Regular inspection and maintenance of air handling units, humidifiers, and dehumidifiers ensures they operate efficiently and do not themselves become sources of RH imbalance.

Replace or clean filters and check drainage systems to avoid standing water, which can raise localized humidity and encourage microbial growth.

Effective humidity control, combined with intelligent monitoring, helps prevent hardware failure, improve system availability, and support a more energy-efficient operation. By staying within ASHRAE’s recommended humidity range and applying targeted strategies, server rooms can achieve a stable and resilient environment.