Data center architects are always devising new techniques to improve the efficiency, integration, and scalability of data centers. When building a data center, however, you frequently have to mix and match switches, servers, storage components, and other gear, which makes it harder to design for greater performance and energy efficiency. The Open Compute Project (OCP) was founded to bring together suppliers from around the world to share designs, specifications, and lessons learned from data center projects, with the goal of improving the efficiency, scalability, and versatility of computer hardware.

Open computing is not a new notion. The Open Compute Project applies the open-source concept to computing hardware and its designs, including productized computing hardware, servers, data storage components, racks, and network switches. The goal is to make it simpler to produce better technology by collaborating and exchanging designs and ideas, creating computer infrastructure solutions that are more efficient, customizable, cost-effective, and interoperable. OCP hardware designs are released for open access, allowing manufacturers to build their own OCP equipment, much as developers create plugins for an open-source software platform like WordPress.

Companies that join the OCP get the chance to work with other industry experts on novel, freely accessible solutions to computer hardware problems. The OCP is a community where members can discuss solutions, showcase concepts, and collaborate on breakthroughs that affect the IT industry and the data center ecosystem. OCP also acts as a marketplace where members can purchase open-source hardware built according to OCP specifications and principles.

Participating in the Open Compute Project (OCP)

The Open Compute Project (OCP) was founded in 2011 by Facebook, Intel, Rackspace, Goldman Sachs, and Andy Bechtolsheim, a co-founder of Sun Microsystems. It started with the data center Facebook built in Prineville, Oregon, which was 38% more energy-efficient, highly scalable, and 24% less costly. Facebook then chose to share its design openly rather than keep it proprietary.

Following the ethos of open cooperation, anyone can contribute hardware or software to the Open Compute Project community, as long as the contribution meets at least three of the four core OCP tenets:

- Efficiency – Design efficiency covers a wide range of factors, including power consumption, cooling, performance, latency, and cost.

- Scalability – Because scalability is critical in the data center, contributions must be scalable, which includes support for remote maintenance, servicing, upgradeability, error reporting, and so on, and each contribution must be well documented.

- Openness – Every contribution must be open so that others can build on it. This means contributions should comply with existing interfaces and build on existing OCP contributions.

- Impact – Contributions should also benefit the OCP community by introducing efficiency improvements, new technologies, better scalability, a new supply chain, and so on.
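The contribution rule above is a simple counting check. As a minimal sketch, it can be expressed in a few lines; the tenet names come from the list above, while the function name and the idea of recording satisfied tenets as a set are hypothetical. Real OCP contribution reviews are carried out by people, not by code like this.

```python
# Hypothetical helper: encodes only the "at least three of the four tenets"
# rule described above. It is not part of any OCP tooling.
TENETS = frozenset({"efficiency", "scalability", "openness", "impact"})


def meets_tenet_rule(satisfied: set[str], required: int = 3) -> bool:
    """Return True if a contribution satisfies at least `required` core tenets."""
    unknown = satisfied - TENETS
    if unknown:
        raise ValueError(f"unknown tenets: {sorted(unknown)}")
    return len(satisfied) >= required


print(meets_tenet_rule({"efficiency", "openness", "impact"}))  # True
print(meets_tenet_rule({"efficiency", "scalability"}))  # False
```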

Design Principles in the Open Compute Project

The Open Compute Project aims to capture the best principles in data center design and open them up for third-party implementation and discussion. The following sections summarize these principles in the areas of electrical, thermal, building, and server design.


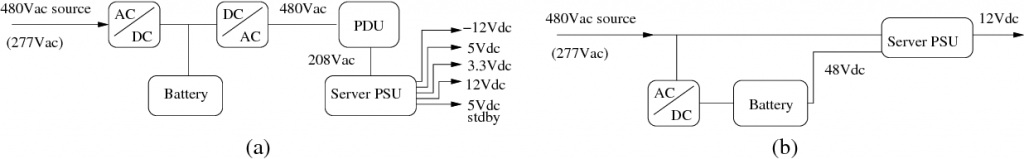

Electrical Design Principles

Photo Credit: www.semanticscholar.org

One of the most critical decisions made by the Open Compute Project (OCP) was to use the 277VAC supplied by the distribution station rather than the usual data center power distribution of 208VAC. This decision was made primarily to eliminate wasteful power conversions and the equipment associated with them. However, it both required and enabled significant changes in other electrical design decisions. For example, we chose a distributed offline backup power strategy instead of a standard inline centralized UPS. Offline batteries sit outside the normal power path and draw little power once fully charged, so they reduced conversion waste. They did, however, require a new server power supply unit (PSU) that could run on 277VAC normally and on 48VDC in backup mode. Because such PSUs were only available for lower-power applications at the time, we had to write our own specifications. By designing our own, we achieved efficiency above 94% across most of the load range while also lowering component costs.

We also designed the remote power panels (RPPs) used in the power distribution. Because most rows in our data center contain our own 277VAC servers and battery cabinets, the RPP at each of these rows supplies power without transformation and with low loss. A few rows, however, have RPPs that step power down to 208VAC to support the occasional use of legacy or commodity equipment.
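The benefit of removing conversion stages can be seen by multiplying the efficiencies of stages connected in series. The sketch below is illustrative only: apart from the roughly 94% PSU figure mentioned above, every stage name and efficiency value (including the assumed 480V-to-208V transformer) is a hypothetical assumption, not measured data. The offline batteries are left out of the 277VAC chain because they are not in the normal power path.

```python
from math import prod


def chain_efficiency(stage_efficiencies: dict[str, float]) -> float:
    """End-to-end efficiency of power conversion stages connected in series."""
    for name, eff in stage_efficiencies.items():
        if not 0.0 < eff <= 1.0:
            raise ValueError(f"{name}: efficiency must be in (0, 1]")
    return prod(stage_efficiencies.values())


# All values are illustrative assumptions, not measurements.
conventional = {
    "inline double-conversion UPS": 0.90,
    "PDU transformer, 480V to 208V": 0.97,
    "commodity server PSU": 0.85,
}
direct_277 = {
    "RPP distribution without transformation": 0.99,
    "custom 277VAC/48VDC server PSU": 0.94,  # lower bound of "over 94%"
}

for label, chain in (("conventional", conventional), ("277VAC direct", direct_277)):
    eff = chain_efficiency(chain)
    print(f"{label}: {eff:.1%} delivered to the server, {1 - eff:.1%} lost")
```

With these assumed numbers, the conventional chain delivers roughly 74% of input power to the server and the 277VAC chain roughly 93%, which shows why each stage removed from the path matters.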


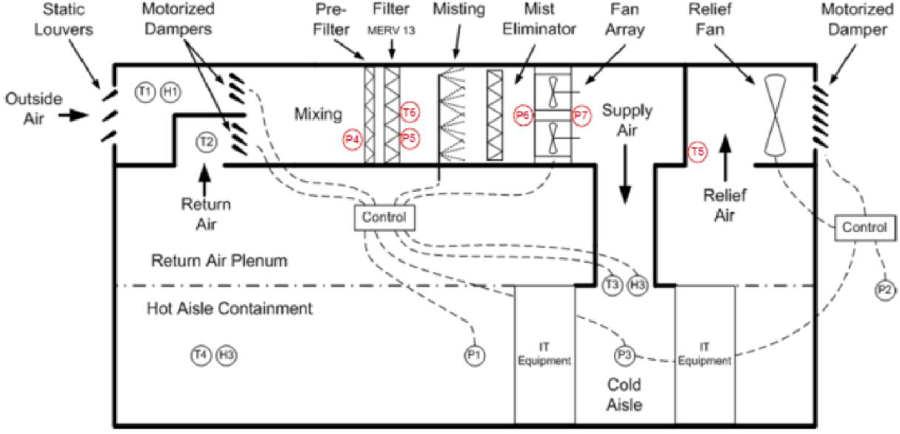

Thermal Design Principles

Photo Credit: citeseerx.ist.psu.edu

The driving idea behind the thermal management of the data center building was 100% outside-air economization. Outside-air cooling offers considerable energy savings over chillers, but it limits where a data center can be located, because it works best with cool, dry air. This limitation was one of the reasons we picked Prineville, Oregon as the site for our first data center. Two other key considerations in our cooling plan were allowing the data center's temperature and humidity limits to exceed ASHRAE's most permissive guidelines, and the high power efficiency of our servers, which generate less heat. We further increased the data center's efficiency by enclosing the hot aisles and using a drop-ceiling plenum for air return, which eliminates the mixing of hot and cold air that occurs in standard data centers without containment.
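Why containment helps can be shown with the standard sensible-heat relation for air, V̇ = P / (ρ · c_p · ΔT), where P is the heat load, ρ the air density, c_p its specific heat, and ΔT the temperature rise from supply to return. When hot and cold air mix, the effective ΔT shrinks and fans must move more air for the same heat. The sketch below assumes approximate air properties and uses hypothetical heat-load and ΔT values; it is a back-of-the-envelope illustration, not a model of the Prineville facility.

```python
# Approximate properties of air near sea level at about 20 °C (assumed values).
AIR_DENSITY_KG_M3 = 1.2
AIR_SPECIFIC_HEAT_J_KG_K = 1005.0


def required_airflow_m3s(heat_load_w: float, delta_t_k: float) -> float:
    """Airflow (m^3/s) needed to remove heat_load_w with a supply-to-return rise of delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return heat_load_w / (AIR_DENSITY_KG_M3 * AIR_SPECIFIC_HEAT_J_KG_K * delta_t_k)


# Hypothetical 10 kW heat load; the two temperature rises are illustrative, not measured.
for label, rise_k in [("open room with mixing", 8.0), ("contained hot aisle", 16.0)]:
    print(f"{label}: {required_airflow_m3s(10_000, rise_k):.2f} m^3/s")
```

Because airflow is inversely proportional to ΔT, halving the effective temperature rise doubles the volume of air the fans must move.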


Building Design Principles

Photo Credit: prnewswire.com

Because of the thermal design described in the preceding section, the data hall needs no ducting or cooling pipes, which removes the need for raised access floors. As a result, we were able to use a concrete slab-on-grade floor for the data center. This option saves money while providing a higher load-bearing capacity and allowing for greater building height. Because we can control the humidity in the building and keep it high enough, we were also able to avoid static-dissipative flooring.

Instead of standard corrugated metal, the ceiling uses lightweight composite deck concrete, which increases the diaphragm load capacity and protects the environment. Combined with strategically placed diagonal bracing, the stronger ceiling allowed structural changes that created more open space. To reduce power usage and cabling, the lighting system uses Power over Ethernet (PoE). Each fixture also includes sensors: temperature for efficient cooling, plus brightness, bulb life, and occupancy sensors for automatic output adjustment.

Because we use custom-sized racks with battery cabinets placed between them at defined intervals, the building's weight-bearing columns are positioned behind the battery cabinets, reducing obstruction to the airflow behind the heat-producing servers. To improve airflow and give the office space its own, more lenient environmental criteria, we separated it from the computing area as an attached annex.


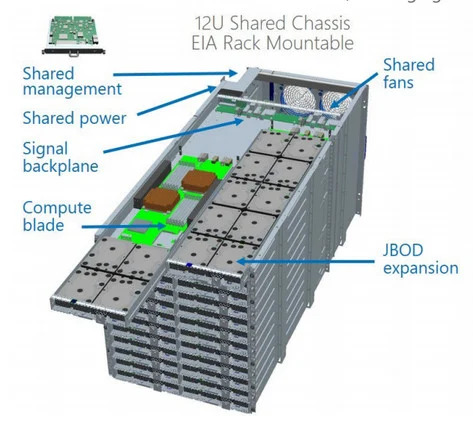

Server Design Principles

Photo Credit: www.theregister.com

The server design is one area where we deviated significantly from commodity solutions. Not only are our servers’ dimensions designed for cooling, but they also include a bespoke motherboard, power supply, and mechanical design.

The three main design principles were:


- Prefer efficiency over aesthetics.

- Optimize for high-impact use cases instead of the generality of application.

- Maximize serviceability and limit it to the front of the server.

The following examples show how we applied these principles, with more details provided in previous work.

- We created high-efficiency power supplies with 277VAC and 48VDC inputs and a single 12VDC output. The PSU includes specific features that provide a smooth startup and a smooth transfer from regular to backup power. While remaining cost-competitive with commodity PSUs, this PSU achieves a power efficiency improvement of 13% to 25%.

- The motherboards were designed for higher efficiency, lower cost, and better airflow. We removed unnecessary components, such as the BMC, SAS controllers, and serial and graphics outputs, which our applications do not use but which commodity suppliers include to meet the flexibility requirements of a broad market. Instead, we provided low-cost, low-power options for managing the servers.

- The side-by-side placement of the two CPUs on the motherboard, together with a custom-sized chassis (1.5U high), high-efficiency fans, and an HDD at the rear, enables ideal airflow and cooling, significantly improving cooling efficiency while using less electricity than commodity servers.

- No resources were spent on appearance (no stickers, paint, plastic bezels, or faceplates), resulting in a "vanity-free" utilitarian design. Almost all of the components were designed to be serviced quickly and easily without screws.

- Each rack is divided into three columns of 30 servers each, with two 48-port switches serving the rack. This amortizes some of the rack's cost and improves the server-to-switch ratio. The switches sit in a custom quick-release tray that can hold any 19-inch, 1U equipment.

- All cables, including network and power, are accessible from the front and have the shortest length necessary to match the pitch of the server to which they are connected. This design saves money and space while also making service easier.
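The rack figures above can be checked with some quick arithmetic. This sketch assumes that the two 48-port switches are shared by the whole three-column rack and that each server uses one switch port; both are interpretations for illustration, and the constant names are hypothetical.

```python
# Figures from the text; the sharing of switches across the rack is an assumption.
COLUMNS_PER_RACK = 3
SERVERS_PER_COLUMN = 30
SERVER_HEIGHT_U = 1.5
SWITCHES_PER_RACK = 2
PORTS_PER_SWITCH = 48

servers = COLUMNS_PER_RACK * SERVERS_PER_COLUMN
ports = SWITCHES_PER_RACK * PORTS_PER_SWITCH
# Vertical space consumed by servers alone in one column (switch tray excluded).
server_height_per_column_u = SERVERS_PER_COLUMN * SERVER_HEIGHT_U

print(f"{servers} servers, {ports} switch ports, {ports - servers} spare (one port per server)")
print(f"{servers / SWITCHES_PER_RACK:.0f} servers per switch")
print(f"{server_height_per_column_u:.1f}U of server height per column")
```

Under these assumptions, 90 servers share 96 switch ports, or 45 servers per switch, and each column devotes 45U to servers.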

AKCP Monitoring Solution

With over 30 years of experience in data center monitoring, AKCP is the world's leader in SNMP-based data center monitoring solutions. In line with the Open Compute Project community's principles, our commitment to the industry is to deliver reliable, efficient, flexible, and scalable products and services.

AKCPro Server Central Monitoring Software

Remote Monitoring With AKCP Wireless Monitoring

Whether you are looking for a few temperature and humidity sensors for your computer room or rolling out a multi-cabinet monitoring solution, AKCP has an end-to-end data center monitoring solution, including sensors and the AKCPro Server DCIM software. Our Rack+ solution is an integrated intelligent rack or aisle containment system. Pressure differential sensors check for proper air pressure gradients between the hot and cold aisles, and RFID cabinet locks secure your IT infrastructure.

AKCP provides both traditional wired and wireless data center monitoring solutions. Our Wireless Tunnel™ System builds on LoRa™ technology, with specific features designed to meet the needs of data center monitoring. Wireless sensors offer rapid deployment, easy installation, and a high level of security. It is the only LoRa-based radio solution designed specifically for critical infrastructure monitoring, with instant notifications and on-sensor threshold checking.

AKCP monitoring solutions help monitor the following:

Cold Aisle

Place dual temperature and humidity sensors in your cold aisle to check that the aisle is neither too hot nor wasting energy by being too cold.
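The cold-aisle check described above amounts to comparing each reading against a lower and an upper limit. The following is a generic sketch, not AKCP firmware or an AKCP API: the class and function names are hypothetical, and the default limits assume the widely used ASHRAE recommended inlet range of 18 to 27 °C, which you would replace with your own site policy. Humidity readings could be checked the same way with their own limits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ColdAisleLimits:
    """Assumed alarm limits in °C, defaulting to the ASHRAE recommended inlet range."""
    min_c: float = 18.0
    max_c: float = 27.0


def classify_cold_aisle(temp_c: float, limits: ColdAisleLimits = ColdAisleLimits()) -> str:
    """Label a cold-aisle reading as too hot, overcooled, or ok."""
    if temp_c > limits.max_c:
        return "too hot"
    if temp_c < limits.min_c:
        return "overcooled (wasting energy)"
    return "ok"


for reading_c in (16.5, 22.0, 29.0):
    print(reading_c, classify_cold_aisle(reading_c))
```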

Cabinet Hotspots

Monitor temperature at the top, middle, and bottom of the IT cabinet, at both the front and the rear, as well as the front-to-rear temperature differential (ΔT).
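With front and rear sensors at three heights, hotspot detection reduces to two checks per position: is the inlet (front) too warm, and is the front-to-rear ΔT too large? The sketch below is a generic illustration rather than AKCP's implementation; the function names, the 27 °C inlet limit, the 15 °C ΔT limit, and the sample readings are all hypothetical.

```python
POSITIONS = ("top", "middle", "bottom")


def cabinet_delta_t(front_c: dict[str, float], rear_c: dict[str, float]) -> dict[str, float]:
    """Front-to-rear temperature rise (ΔT) in °C at each height position."""
    return {pos: rear_c[pos] - front_c[pos] for pos in POSITIONS}


def hotspots(
    front_c: dict[str, float],
    rear_c: dict[str, float],
    max_inlet_c: float = 27.0,  # hypothetical inlet limit
    max_delta_t_c: float = 15.0,  # hypothetical ΔT limit
) -> list[str]:
    """Return human-readable alerts for hot inlets and excessive ΔT."""
    alerts = []
    for pos in POSITIONS:
        if front_c[pos] > max_inlet_c:
            alerts.append(f"{pos}: inlet {front_c[pos]:.1f} °C exceeds {max_inlet_c} °C")
    for pos, dt in cabinet_delta_t(front_c, rear_c).items():
        if dt > max_delta_t_c:
            alerts.append(f"{pos}: ΔT {dt:.1f} °C exceeds {max_delta_t_c} °C")
    return alerts


# Hypothetical readings for one cabinet.
front = {"top": 28.5, "middle": 23.0, "bottom": 21.0}
rear = {"top": 40.0, "middle": 41.5, "bottom": 33.0}
print(cabinet_delta_t(front, rear))
for alert in hotspots(front, rear):
    print(alert)
```

A warm inlet near the top of a cabinet often points to hot exhaust recirculating over the rack, while a large ΔT on its own points to heavily loaded equipment or restricted airflow at that height.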

Differential Pressure

Monitor pressure differentials to confirm adequate airflow from the cold aisle to the hot aisle. Maintaining the correct pressure differential lets the system run more efficiently by preventing back pressure and keeping hot air from mixing back into the cold aisle.
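A pressure-differential check can be sketched as follows, assuming the convention that the cold aisle should sit at a slightly higher pressure than the hot aisle so that air flows through the equipment toward the hot aisle. The function name and the 2 Pa minimum margin are hypothetical placeholders, not AKCP settings or a published recommendation.

```python
def check_containment_pressure(
    cold_aisle_pa: float,
    hot_aisle_pa: float,
    min_positive_pa: float = 2.0,  # hypothetical minimum margin
) -> str:
    """Classify the cold-to-hot aisle pressure differential (ΔP = cold - hot)."""
    dp = cold_aisle_pa - hot_aisle_pa
    if dp <= 0:
        # Zero or reversed gradient: hot air can be pushed back into the cold aisle.
        return f"ALERT: ΔP = {dp:.1f} Pa; risk of back pressure and hot air mixing"
    if dp < min_positive_pa:
        return f"WARN: ΔP = {dp:.1f} Pa; airflow margin is low"
    return f"OK: ΔP = {dp:.1f} Pa"


print(check_containment_pressure(5.0, 0.0))
print(check_containment_pressure(1.0, 0.0))
print(check_containment_pressure(0.0, 1.5))
```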

Water leaks, access control, and sensor status lights

Rope water sensors under raised access floors, rack-level and aisle access control, and sensor status lights complete the intelligent containment monitoring system.

Conclusion

It is not always possible to take the bespoke approach. However, when the scale warrants it, there are plenty of data center design options that can yield considerable CAPEX and OPEX savings. Compared with a standard data center of the same computing capacity, the concepts outlined here produced a data center that is 24% less expensive to equip and uses 38% less electricity to run. Furthermore, the average power usage effectiveness (PUE) observed during the first year of operation was 1.07, possibly the lowest sustained PUE recorded at the time.
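PUE is the ratio of total facility power to the power delivered to IT equipment, so a PUE of 1.07 means that everything other than IT load (cooling, power distribution losses, lighting, and so on) adds 7% on top of the IT load. The sketch below works through that arithmetic for a hypothetical 1,000 kW IT load; only the 1.07 figure comes from the text.

```python
def pue(total_facility_kw: float, it_kw: float) -> float:
    """Power usage effectiveness: total facility power divided by IT equipment power."""
    if it_kw <= 0:
        raise ValueError("it_kw must be positive")
    return total_facility_kw / it_kw


def facility_power_for(it_kw: float, pue_value: float) -> float:
    """Total facility power implied by an IT load and a PUE."""
    return it_kw * pue_value


it_load_kw = 1_000.0  # hypothetical IT load, for illustration only
total_kw = facility_power_for(it_load_kw, 1.07)  # PUE reported in the text
overhead_kw = total_kw - it_load_kw
print(f"total {total_kw:.0f} kW, overhead {overhead_kw:.0f} kW "
      f"({overhead_kw / total_kw:.1%} of facility power)")
```

At a PUE of 1.07, the overhead is 70 kW for every 1,000 kW of IT load, or about 6.5% of total facility power.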
