Cooling towers like this one are commonly used to meet the high cooling requirements of data centers.

Data Centers and Free Air Cooling

Artificial Intelligence (AI), gaming, high-performance computing, 3D graphics, and the Internet of Things (IoT) all require faster and more complex computing power. The rapidly expanding cloud business, the growth of “edge computing,” and competition between providers are creating a need for more data center space, along with growing demand for more computing cores per square foot. The processors in these data centers generate more heat than they did five years ago, 100 W to 130 W-plus, and new processors introduced in the last two years emit 200 W to 600 W. IDC reports that annual energy consumption per server is growing by 9 percent globally, even as growth in performance pushes energy demand further up.

Air Cooled Data Centers

Air-cooled systems work very well with processors that generate up to 130 W of heat, and when stretched to the limit they can accommodate 200 W processors. Above 200 W, air-cooling a processor requires larger enclosures, which wastes rack space rather than saving it. Cooling the chips directly is the only option that supports high-power processors while keeping enclosures small and density high.

Two common designs for liquid cooling are direct-to-chip cold plates (or evaporators) and immersion cooling. Direct-to-chip cold plates sit on top of the board’s processors to remove their heat. They fall into two main groups: single-phase cold plates and two-phase (dual-phase) evaporators. Single-phase cold plates circulate cool water through the plate, where it absorbs heat without changing phase. In two-phase evaporators, a low-pressure dielectric liquid flows into the evaporator, heat from the components boils it, and the heat leaves as vapor. The hot vapor travels to a heat rejection coil, which transfers the heat either to chilled water that loops back to the cooling plant or to free airflow that carries it outside.
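The practical difference between the two cold-plate types is how the coolant carries heat: single-phase water absorbs sensible heat as its temperature rises, while a two-phase fluid absorbs latent heat as it boils. The Python sketch below estimates the coolant mass flow needed per processor from the standard energy balances Q = m_dot * cp * dT and Q = m_dot * x * h_fg. The specific heat of water is a textbook value; the 10 K water temperature rise, the 150 kJ/kg latent heat for the dielectric fluid, and the exit-quality value are illustrative assumptions, not figures from any particular product.

```python
"""Coolant mass flow per processor: single-phase vs. two-phase (illustrative sketch)."""

WATER_CP_J_PER_KG_K = 4186.0   # specific heat of liquid water, J/(kg*K)
ASSUMED_HFG_J_PER_KG = 150e3   # hypothetical latent heat of a dielectric fluid, J/kg


def single_phase_flow_kg_s(heat_w: float, delta_t_k: float,
                           cp_j_per_kg_k: float = WATER_CP_J_PER_KG_K) -> float:
    """Sensible heat balance Q = m_dot * cp * dT, solved for m_dot (kg/s)."""
    if delta_t_k <= 0:
        raise ValueError("coolant temperature rise must be positive")
    return heat_w / (cp_j_per_kg_k * delta_t_k)


def two_phase_flow_kg_s(heat_w: float,
                        latent_heat_j_per_kg: float = ASSUMED_HFG_J_PER_KG,
                        exit_quality: float = 1.0) -> float:
    """Latent heat balance Q = m_dot * x * h_fg, solved for m_dot (kg/s).

    exit_quality (x) is the fraction of the liquid that boils off in the
    evaporator; 1.0 means it leaves fully vaporized.
    """
    if latent_heat_j_per_kg <= 0 or not 0.0 < exit_quality <= 1.0:
        raise ValueError("invalid latent heat or exit quality")
    return heat_w / (exit_quality * latent_heat_j_per_kg)


if __name__ == "__main__":
    # Processor heat levels mentioned in the article, in watts.
    for watts in (130, 200, 600):
        water_g_s = single_phase_flow_kg_s(watts, delta_t_k=10.0) * 1000
        fluid_g_s = two_phase_flow_kg_s(watts, exit_quality=0.5) * 1000
        print(f"{watts:>4} W: water {water_g_s:.1f} g/s, "
              f"two-phase fluid {fluid_g_s:.1f} g/s")
```

Under these assumptions, boiling a kilogram of the dielectric fluid absorbs more energy than warming a kilogram of water by 10 K, so the two-phase loop needs less mass flow for the same heat load. Real two-phase designs also keep exit quality below 1.0 to avoid dry-out, which the `exit_quality` parameter roughly models.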

Immersion Cooling for Servers

Immersion cooling submerges the complete hardware in a large, leak-proof bath of dielectric fluid. The fluid absorbs the heat and, in some systems, turns to vapor, which is cooled or condensed and recycled back through the system as liquid.

Whether cooling is air-based or liquid-based, constant temperature monitoring of servers and their components is essential to ensure healthy and efficient operation.

Free Air Cooling

Free-air cooling is a cost-effective way of using low outside air temperatures to cool server rooms. With the recent expansion of the American Society of Heating, Refrigerating and Air-Conditioning Engineers’ (ASHRAE’s) guidelines for acceptable data center operating temperature and humidity ranges, free-air cooling has become a best practice for many operators.

Operators have adopted the following approaches:

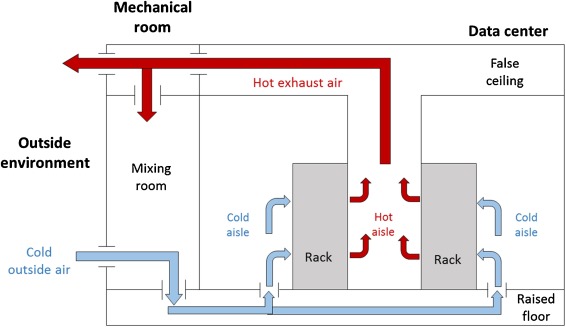

Indirect air: Outside air is drawn through a heat exchanger that keeps it separate from the air inside the data center, so the cooler outside air removes heat without mixing with the internal air. This method prevents particulates from entering the white space while keeping humidity levels under control.

Direct air: Outside air passes through an evaporative cooler and is then routed through filters to the data center cold aisle. When the outside air is too cold, the system blends it with warm exhaust air to reach the desired supply temperature for the facility.
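Both approaches can be reduced to simple temperature balances. The sketch below assumes an indirect air-to-air heat exchanger with balanced airflows and a fixed effectiveness (the 0.7 in the example is a hypothetical value), and treats direct-air blending as sensible-only mixing, T_mix = f * T_out + (1 - f) * T_exhaust, solved for the outside-air fraction f. Humidity, fan heat, and the evaporative stage are ignored, so this illustrates the principle rather than a real control algorithm.

```python
"""Supply-air estimates for indirect and direct free-air cooling (illustrative sketch)."""


def indirect_supply_temp_c(return_c: float, outside_c: float,
                           effectiveness: float) -> float:
    """Air-to-air heat exchanger with balanced flows.

    The data center air leaving the exchanger is cooled by a fraction
    (effectiveness) of the maximum possible drop, T_return - T_outside.
    The two air streams never mix.
    """
    if not 0.0 <= effectiveness <= 1.0:
        raise ValueError("effectiveness must be between 0 and 1")
    return return_c - effectiveness * (return_c - outside_c)


def direct_outside_air_fraction(return_c: float, outside_c: float,
                                target_c: float) -> float:
    """Fraction of outside air to blend with exhaust air to reach target_c.

    Sensible-only mixing: T_mix = f * T_out + (1 - f) * T_return, solved for f
    and clamped to [0, 1]. A result of 1.0 means outside air alone is not cold
    enough, so the evaporative cooler (not modeled here) must do the rest.
    """
    if outside_c >= return_c:
        return 0.0  # outside air offers no cooling benefit; recirculate instead
    f = (return_c - target_c) / (return_c - outside_c)
    return min(1.0, max(0.0, f))


if __name__ == "__main__":
    # Hypothetical conditions: 35 C exhaust air, 5 C outside, 22 C target supply.
    print(f"indirect supply: {indirect_supply_temp_c(35.0, 5.0, 0.7):.1f} C")
    print(f"direct outside-air fraction: "
          f"{direct_outside_air_fraction(35.0, 5.0, 22.0):.2f}")
```

With these hypothetical numbers, the direct system would draw a little under half of its supply air from outside and recirculate the rest, which is the cold-weather blending described in the paragraph above.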

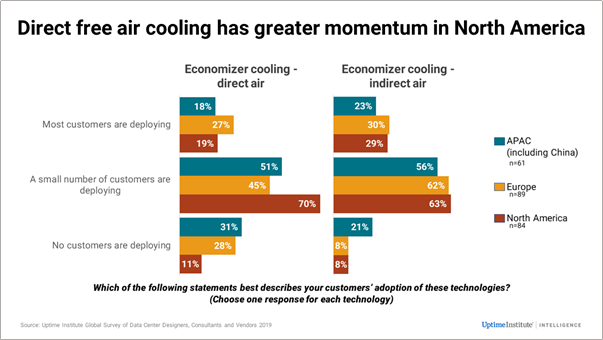

A survey of cooling system vendors showed that free-air cooling economization projects are gaining in popularity, with indirect free-air cooling slightly preferred over direct air. 84% of the vendors said that at least some of their customers were deploying indirect air cooling (74% for direct air), and only 16% said that none of their customers were using indirect free-air cooling (26% for direct air), as the figure shows.

Increasing cost efficiency, along with growing awareness of and interest in environmental impact, will drive the adoption of free-air cooling. Compared with older mechanical cooling systems, free-air cooling requires less upfront capital investment and has lower operating expenses, while also reducing the data center’s carbon footprint.

As with many innovations, however, adopting free-air cooling in an existing data center requires a complete retrofit of the current structure, which incurs some initial investment.

Some issues hampering the installation of free-air cooling will likely continue in the short term. These include the upfront retrofit investment required for existing facilities; humidity and air quality constraints; lack of reliable weather predictions in some areas; and restrictive service level agreements.

Several industry trends are converging to encourage new data center cooling strategies that save on up-front cooling system costs while also delivering significant savings over the lifespan of the data center.

Trends Driving Changes in Data Center Cooling

The trend toward free-air cooling systems began years ago with a recommendation from the American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE) that companies raise the operating temperature of their data centers to 27°C (80°F). Because cooling systems accounted for 40% of data center power usage, moving to free-air cooling promised significant savings: the higher the allowed temperature, the more hours per year outside air alone can do the cooling.
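The link between the allowed temperature and savings can be made concrete by counting the hours in which outside air alone is cold enough. The sketch below assumes an hour qualifies when the outdoor temperature plus a fixed approach (a hypothetical 3 K allowance for heat-exchanger and fan losses) is at or below the supply set point; the hourly readings in the example are made up for illustration and are not weather data.

```python
"""Free-cooling hours at different supply set points (illustrative sketch)."""

from typing import Iterable


def free_cooling_hours(outdoor_temps_c: Iterable[float], setpoint_c: float,
                       approach_k: float = 3.0) -> int:
    """Count hourly readings in which outside air alone can meet the set point.

    approach_k is an assumed allowance for heat-exchanger and fan losses.
    """
    return sum(1 for t in outdoor_temps_c if t + approach_k <= setpoint_c)


if __name__ == "__main__":
    # Hypothetical hourly outdoor temperatures in degrees C.
    hourly = [12.0, 15.0, 18.0, 20.0, 22.0, 24.0, 26.0, 28.0]
    for setpoint in (20.0, 27.0):
        hours = free_cooling_hours(hourly, setpoint)
        print(f"set point {setpoint:.0f} C: {hours} of {len(hourly)} hours on free air")
```

Raising the set point from 20°C to 27°C triples the qualifying hours in this toy series. Run against a site’s real hourly weather data, the same count indicates how much of the year mechanical cooling could be reduced.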

Another trend is that data centers are becoming both larger and smaller. Large businesses and cloud service providers are investing in big centralized data centers to serve the rapidly growing number of connected devices, driven mainly by Internet of Things technology. Other companies are moving to small edge data centers, also driven mainly by IoT applications and by digital transformation initiatives that require localized computing power.

Then there’s the Kigali Amendment, an agreement negotiated in 2016 by 170 countries to reduce the use of hydrofluorocarbons (HFCs) in refrigerators and cooling systems. It went into effect on Jan. 1, 2019, and has so far been ratified by 81 parties (countries and regional organizations, including the European Union). It encourages research into, and use of, alternative refrigerants in cooling systems, including those in data centers.

Reinventing Data Center Cooling Systems

With these trends in mind, and with recent innovations in data center cooling technology, data center cooling systems built today can reduce both upfront capital costs (CapEx) and ongoing operational expenses (OpEx).

Free-air cooling systems can save around 30% on OpEx. They can also provide large CapEx savings, because modular free-air cooling units are designed with smaller compressors.

Another option is adiabatic cooling, which uses water evaporation to cool data centers and helps reduce reliance on HFCs. Adiabatic systems were not always an option for every data center, because they could only be used where water was readily available and inexpensive.

Newer adiabatic systems, especially those built around the latest chillers, can run in adiabatic mode when water is available and switch to dry (non-adiabatic) operation when it is not. This way, companies don’t have to choose between adiabatic and non-adiabatic cooling: the same system supports both, in all conditions.

Data Center Cooling Solutions, from the Edge to the Cloud

For cloud and service providers, the latest data centers use both air economizers and adiabatic chillers that minimize capital expenses and maximize operating expense savings. The newest adiabatic chillers are available with or without an embedded free-air cooling system and are designed to operate at extremely high energy efficiency levels. These solutions take advantage of today’s higher data center design temperatures and, where it exists, water availability to boost savings.

For 10 to 15 years, the power density of data center server racks remained stable at 3 to 5 kW. Air-cooled data centers using chillers and computer room air conditioning (CRAC) units were enough to remove the heat generated by the servers, keeping the facilities, and the CPUs, below their maximum temperatures. This was possible because CPUs produced no more than 130 W of heat.

Data centers used raised-floor systems with hot aisles and cold aisles as their main cooling method. Cold air from the CRAC and computer room air handler (CRAH) units was distributed into the space below the raised floor and then rose through perforated tiles into the main space in front of the servers. This method is simple and has been used for years; although improved cooling methods have gradually been adopted, it is still preferred by most data centers.

In recent years, as rack power densities increased to 10 kW or more, air-cooled designs adopted hot- and cold-aisle containment layouts, delivering significant energy savings. The idea behind these layouts is to separate the cool server intake air from the heated server exhaust air with a physical barrier, preventing the two from mixing. Another air-based method is in-rack heat extraction, in which compressors and chillers built into the racks remove the hot air.

In 2018, rack densities continued to increase, approaching 20 kW and pushing air-cooled systems to the limit of what they can deliver economically. As rack densities continue to grow, reaching as high as 100 kW per rack by some estimates, direct-to-chip liquid cooling becomes a viable solution.
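Why air cooling runs out of headroom follows from the sensible-heat balance for air, V = P / (rho * cp * dT): the airflow a rack needs grows linearly with its power. The sketch below uses approximate sea-level values for air density (1.2 kg/m³) and specific heat (1005 J/(kg·K)) and assumes a 12 K rise from server inlet to exhaust; all three are assumptions chosen for illustration.

```python
"""Airflow needed to air-cool a rack at a given power density (illustrative sketch)."""

AIR_DENSITY_KG_M3 = 1.2       # approximate air density near sea level (assumed)
AIR_CP_J_PER_KG_K = 1005.0    # approximate specific heat of dry air (assumed)
M3_S_TO_CFM = 2118.88         # 1 m^3/s expressed in cubic feet per minute


def rack_airflow_m3_s(rack_kw: float, delta_t_k: float = 12.0) -> float:
    """Airflow (m^3/s) that carries rack_kw away with an inlet-to-exhaust rise of delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    return rack_kw * 1000.0 / (AIR_DENSITY_KG_M3 * AIR_CP_J_PER_KG_K * delta_t_k)


if __name__ == "__main__":
    # Rack densities discussed in the article, in kW.
    for kw in (5, 10, 20, 100):
        flow = rack_airflow_m3_s(kw)
        print(f"{kw:>3} kW rack: {flow:.2f} m^3/s (~{flow * M3_S_TO_CFM:,.0f} CFM)")
```

Under these assumptions, a 20 kW rack needs four times the airflow of a 5 kW rack, and a 100 kW rack twenty times as much, which is one reason moving heat with liquid rather than air becomes attractive at high densities.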

The Future of Data Center Cooling is Now

Many innovations from different companies promise significant changes in data center cooling design: using seawater or rainwater to reduce use of precious natural resources, using AI to analyze how data centers are operating and adjust cooling accordingly in real time, and deploying automated robots that monitor the temperature and humidity of the servers in the rack.

The future of data center cooling optimization is now. The thermal design of traditional data centers is becoming obsolete, as the heat produced by continuous processing in high-density computing environments presents ever greater cooling challenges. If data center managers lack accurate knowledge of actual device power consumption, IT staff may overcompensate and drive energy usage beyond the levels required to maintain safe cooling margins.

Fortunately, data center management solutions support data-driven decision-making and more precise operational control by providing visibility into power and thermal consumption, server health, and utilization. Using the cooling analysis in such a tool, IT staff can lower cooling costs by raising the room temperature, which improves power usage effectiveness (PUE) and energy efficiency, while monitoring hardware for temperature issues.

When a data center manager responsible for a high-density computing environment has the capability to safely raise the room’s overall set-point temperature, she can significantly lower annual cooling costs across the organization’s entire data center.
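PUE is defined as total facility power divided by IT equipment power, so anything that trims cooling power at a constant IT load pulls PUE toward its ideal value of 1.0. The sketch below computes it directly; the 1,000 kW IT load and the cooling and other overhead figures in the example are hypothetical, chosen only to show the effect of a set-point change that reduces cooling power.

```python
"""Power usage effectiveness before and after a set-point change (illustrative sketch)."""


def pue(it_kw: float, cooling_kw: float, other_kw: float = 0.0) -> float:
    """PUE = total facility power / IT equipment power."""
    if it_kw <= 0:
        raise ValueError("IT load must be positive")
    return (it_kw + cooling_kw + other_kw) / it_kw


if __name__ == "__main__":
    # Hypothetical facility: 1,000 kW of IT load and 100 kW of other overhead
    # (lighting, power distribution losses). Cooling power drops after the
    # set point is raised.
    before = pue(it_kw=1000, cooling_kw=500, other_kw=100)
    after = pue(it_kw=1000, cooling_kw=400, other_kw=100)
    print(f"PUE before: {before:.2f}, after: {after:.2f}")
```

For comparison, a facility whose only overhead is cooling at 40% of total power, the share cited earlier for data centers before free-air cooling, would have a PUE of 1 / (1 - 0.4), or about 1.67.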

Conclusions

Data center managers face several global challenges: protecting a rapidly expanding store of data and an increasing number of mission-critical applications; managing any number of remote locations; and implementing pressing sustainability initiatives, which must be balanced against rising energy costs.

To meet these challenges, data center management tools not only provide detailed real-time monitoring of the environment but also supply analytic thermal data that can identify temperature issues before they disrupt data center operations. Monitoring and combining real-time power and thermal consumption data also helps IT staff analyze and manage data center capacity effectively, so that power and cooling systems use energy more efficiently.
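One simple way such analytics can flag a problem early is to fit a trend to recent sensor readings and estimate when a limit will be crossed. The sketch below is a hypothetical illustration, not how any particular management product works: it fits an ordinary least-squares line to timestamped inlet temperatures and extrapolates to a threshold, here 27°C to match the ASHRAE operating temperature cited earlier.

```python
"""Early warning by extrapolating a temperature trend (hypothetical sketch)."""

from typing import Optional, Sequence


def minutes_until_threshold(times_min: Sequence[float], temps_c: Sequence[float],
                            threshold_c: float) -> Optional[float]:
    """Fit a least-squares line to recent readings and extrapolate to threshold_c.

    Returns 0.0 if the latest reading already meets or exceeds the threshold,
    None if the trend is flat or falling, otherwise minutes after the last sample.
    """
    n = len(times_min)
    if n < 2 or n != len(temps_c):
        raise ValueError("need at least two paired readings")
    if temps_c[-1] >= threshold_c:
        return 0.0
    mean_t = sum(times_min) / n
    mean_y = sum(temps_c) / n
    sxx = sum((t - mean_t) ** 2 for t in times_min)
    if sxx == 0:
        raise ValueError("timestamps must not all be equal")
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in zip(times_min, temps_c))
    slope = sxy / sxx  # degrees C per minute
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_t
    crossing = (threshold_c - intercept) / slope  # time at which the line hits the limit
    return max(0.0, crossing - times_min[-1])


if __name__ == "__main__":
    # Hypothetical inlet readings taken every 5 minutes.
    times = [0, 5, 10, 15]
    temps = [24.0, 24.5, 25.0, 25.5]
    eta = minutes_until_threshold(times, temps, threshold_c=27.0)
    print("no upward trend" if eta is None else f"threshold in ~{eta:.0f} min")
```

A linear fit is deliberately crude; it reacts to steady drifts such as a failing fan or a blocked filter, but real tools would also need to handle noisy sensors and sudden step changes.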

Reference links:

https://blog.se.com/datacenter/2019/12/03/global-trends-data-center-cooling-long-term-cost-savings/

https://datacenterfrontier.com/history-future-data-center-cooling/

https://journal.uptimeinstitute.com/data-center-free-air-cooling-trends/