Data centers consume a lot of energy to process information. Hardware that performs billions of calculations generates a huge amount of heat in the process, and excessive heat slows down data center operations. To dissipate that heat and keep processing speeds constant, every facility includes a cooling system. This article examines the data center cooling technologies available.

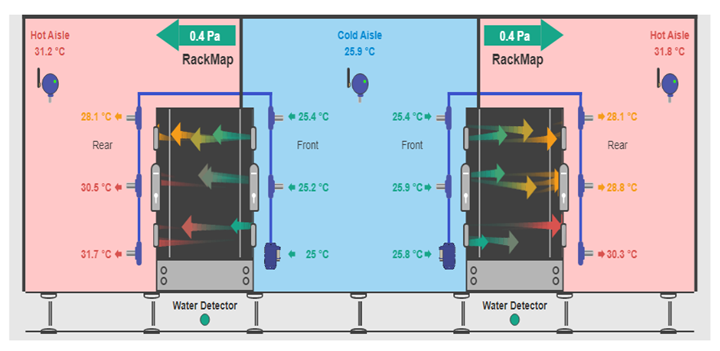

Monitoring hot aisle containment using AKCP thermal map sensors with differential air pressure measurements. Visualization through AKCPro Server Monitoring Software

The purpose of data center cooling technology is to maintain environmental conditions suitable for information technology equipment (ITE) operation. The primary challenge is removing heat from the equipment and transferring it outside. In all data centers, managers must ensure that the cooling systems operate continuously and reliably.

As with any system, a data center cooling system should be energy-efficient and cost-effective. Data centers are big energy consumers, and in a poorly designed or poorly operated facility, the cooling system can use as much energy as, or even more than, the computers it supports. It is advisable to build a well-designed, well-operated cooling system that uses only a small fraction of the energy consumed by the ITE.
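To make that comparison concrete, the sketch below expresses cooling energy as a fraction of ITE energy over the same period. The function name and the annual figures are hypothetical, chosen only to contrast a poorly run facility with a well-run one.

```python
def cooling_to_ite_ratio(cooling_kwh: float, ite_kwh: float) -> float:
    """Return cooling energy as a fraction of ITE energy over the same period."""
    if ite_kwh <= 0:
        raise ValueError("ITE energy must be positive")
    return cooling_kwh / ite_kwh


# Hypothetical annual figures, for illustration only.
poorly_run = cooling_to_ite_ratio(cooling_kwh=1_000_000, ite_kwh=1_000_000)
well_run = cooling_to_ite_ratio(cooling_kwh=150_000, ite_kwh=1_000_000)
print(f"poorly run: {poorly_run:.0%}, well run: {well_run:.0%}")
```

A ratio near 100% matches the "as much energy as the computers" case; a well-run system keeps it to a small fraction.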

The Economic Meltdown of Moore’s Law

Since the mid-2000s, as computing technology became faster and more powerful, designers and operators have worried about whether air-cooling technologies could keep up with increasingly power-hungry servers. With power requirements approaching or exceeding 5 kilowatts (kW) per cabinet, some data centers have had to resort to technologies such as rear-door heat exchangers and other kinds of in-row cooling to keep the servers cool.
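The 5 kW-per-cabinet figure can serve as a simple screening rule. This is a minimal sketch with hypothetical cabinet IDs and readings: it flags cabinets at or above that level, which are the ones that may need close-coupled cooling such as rear-door or in-row units.

```python
HIGH_DENSITY_KW = 5.0  # screening threshold taken from the text


def high_density_cabinets(cabinet_kw: dict[str, float],
                          threshold_kw: float = HIGH_DENSITY_KW) -> list[str]:
    """Return the IDs of cabinets whose power draw meets or exceeds the threshold."""
    return sorted(cid for cid, kw in cabinet_kw.items() if kw >= threshold_kw)


# Hypothetical per-cabinet power readings in kW.
readings = {"A01": 3.2, "A02": 5.0, "B01": 7.8, "B02": 4.9}
print(high_density_cabinets(readings))
```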

In “The Economic Meltdown of Moore’s Law,” Ken Brill of the Uptime Institute predicted in 2007 that the heat generated by fitting more and more transistors onto a chip would eventually make it infeasible to cool data centers without radically revising current cooling technology.

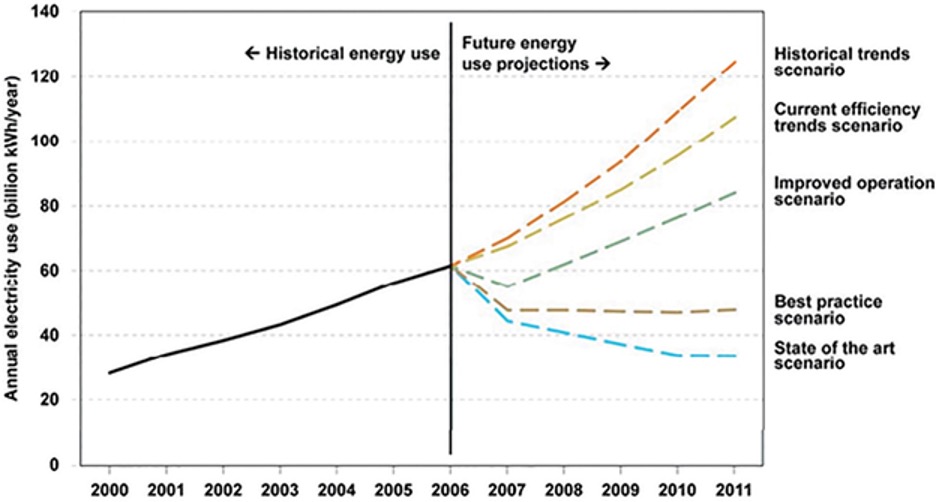

The concern was significant enough for the U.S. Congress to begin investigating. Aware of data centers and the amount of energy they required, Congress ordered the U.S. Environmental Protection Agency (EPA) to study and report on data center energy consumption (Public Law 109-431). The law also directed the EPA to develop efficiency strategies and drive the market for efficiency. The resulting study predicted vastly increasing energy use by data centers unless measures were taken to significantly increase efficiency.

The economic meltdown of Moore’s Law hasn’t happened yet, but if it does, chip and transistor designs will be limited by the need to prevent overheating. This will affect the efficiency of the next generation of data centers.

Legacy Cooling and the End of Raised Floor

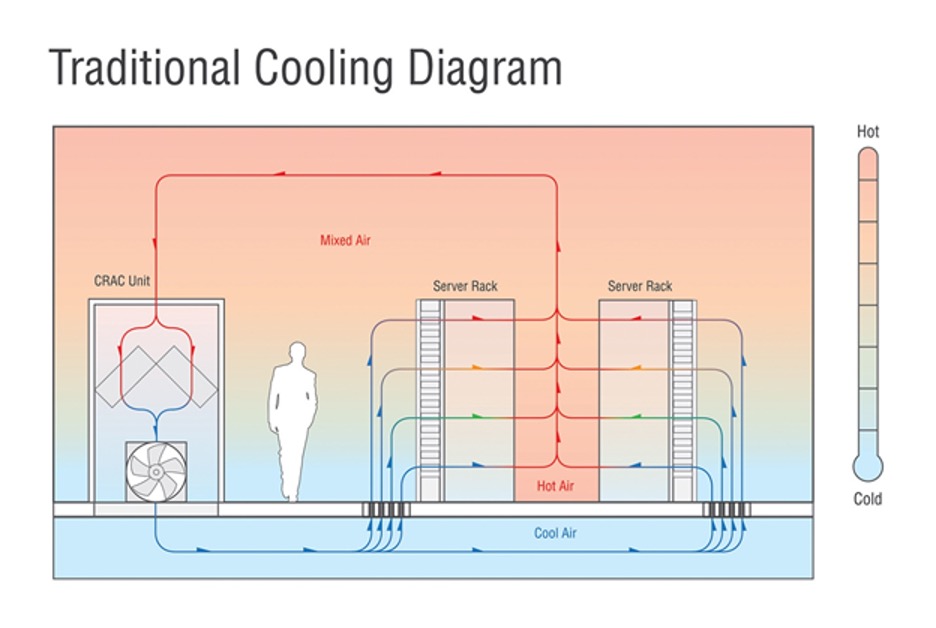

Traditionally, computer rooms and data centers have used raised floor systems to deliver cooling air to servers. A computer room air conditioner (CRAC) or computer room air handler (CRAH) supplies cold air to the space below the raised floor. Perforated tiles let the cold air leave the underfloor space and enter the main room, usually in front of server intakes. After passing through the servers, the heated air mixed with the cold air in the room and returned to the CRAC/CRAH to be cooled. The CRAC unit fans ran at a constant speed, and the CRAC contained a humidifier that produced steam. The primary purpose of a raised floor was to deliver cold air where it was needed with very little effort: simply swap a solid tile for a perforated one.

That common design for computer rooms and data centers is still in use today. The legacy system simply delivers a relatively small quantity of conditioned air and lets it mix with the room air to reach the set temperature. This worked well when ITE densities were low, because low densities allowed the system to meet its primary goal despite its poor efficiency and uneven cooling.

As densities rise, many modern data centers no longer use raised floors, relying instead on improved air delivery techniques.

When is it Cold Enough?

If a server overheats, onboard logic will shut it down to avoid damage. If a server runs too hot, but not hot enough to shut itself off, its performance may be limited. Because technology may be replaced as often as every three years, ITE operators should consider how much reduced server performance and reliability actually matter to their operations. The answer depends on the situation. In a homogeneous environment with a refresh cycle of four years or less, the additional failures caused by higher temperatures may not justify investing in better cooling designs, especially if the manufacturer guarantees that the ITE will still work at higher temperatures. In a mixed environment, with equipment of longer expected life spans, temperatures may need to be checked more frequently.
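The two failure modes described above can be modeled as thresholds on a component temperature reading. This is a sketch only: the threshold values are hypothetical placeholders, and real servers apply vendor-specific limits to specific components.

```python
from enum import Enum


class ThermalState(Enum):
    NORMAL = "normal"
    THROTTLED = "throttled"  # too hot: performance may be limited
    SHUTDOWN = "shutdown"    # overheated: onboard logic powers the server off


# Hypothetical component temperature limits in °C; real values are vendor-specific.
THROTTLE_C = 90.0
SHUTDOWN_C = 100.0


def thermal_state(component_c: float) -> ThermalState:
    """Classify a component temperature against the hypothetical limits above."""
    if component_c >= SHUTDOWN_C:
        return ThermalState.SHUTDOWN
    if component_c >= THROTTLE_C:
        return ThermalState.THROTTLED
    return ThermalState.NORMAL


for t in (50.0, 95.0, 102.0):
    print(t, thermal_state(t).value)
```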

Cooling Process

The cooling process involves these steps:

- Server Cooling. Removing heat from the ITE

- Space Cooling. Removing heat from the space that houses the ITE

- Heat Rejection. Rejecting the heat to a heat sink located outside the data center

- Fluid Conditioning. Circulating conditioned cooling fluid back to the white space to maintain temperature conditions within the space.

Server Cooling

ITE generates heat because all of its components use electricity. It’s basic physics: energy is conserved, so nearly all of the electrical energy a server draws is converted by its components into heat.
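Because nearly all of the power a server draws ends up as heat, the heat load can be estimated directly from power draw. The sketch below converts watts to BTU per hour, a unit common in cooling equipment ratings, using the standard factor of about 3.412 BTU/h per watt; the 500 W server is a hypothetical example.

```python
WATTS_TO_BTU_PER_HOUR = 3.412  # 1 W ≈ 3.412 BTU/h


def heat_load_btu_per_hour(it_power_watts: float) -> float:
    """Estimate heat output, assuming all electrical input power becomes heat."""
    return it_power_watts * WATTS_TO_BTU_PER_HOUR


# A hypothetical server drawing 500 W.
print(round(heat_load_btu_per_hour(500)))  # → 1706
```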

Heat transfers from the solid components to a fluid (usually air) within the server, often via another solid (heat sinks). Internal fans draw air across the internal components, speeding up the heat transfer.
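The fans' job can be quantified with the sensible-heat balance Q = ρ·c_p·V̇·ΔT. This sketch, assuming typical room-air properties and a hypothetical 500 W server with a 10 K rise from inlet to exhaust, estimates the airflow the internal fans must move.

```python
AIR_DENSITY = 1.2            # kg/m³, approximate at room conditions
AIR_SPECIFIC_HEAT = 1005.0   # J/(kg·K), approximate
M3S_TO_CFM = 2118.88         # cubic feet per minute in 1 m³/s


def required_airflow_m3s(heat_watts: float, delta_t_k: float) -> float:
    """Airflow needed to carry heat_watts with a delta_t_k temperature rise.

    Solves the sensible-heat balance Q = rho * cp * V * dT for V.
    """
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_watts / (AIR_DENSITY * AIR_SPECIFIC_HEAT * delta_t_k)


# Hypothetical 500 W server with a 10 K inlet-to-exhaust rise.
flow = required_airflow_m3s(500, 10)
print(f"{flow:.4f} m³/s ≈ {flow * M3S_TO_CFM:.0f} CFM")
```

Allowing a larger temperature rise across the server reduces the airflow, and therefore the fan effort, needed for the same heat.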

Some systems use other fluids, which can remove heat more efficiently than air, to cool the servers and carry the heat away.
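One way to see why liquids remove heat more efficiently is to compare volumetric heat capacity: the heat a cubic metre of fluid absorbs per kelvin of temperature rise. The sketch uses approximate textbook properties for air and water near room temperature; it illustrates the physics and is not a sizing tool.

```python
# Approximate properties near room temperature: kg/m³ and J/(kg·K).
FLUIDS = {
    "air": {"density": 1.2, "specific_heat": 1005.0},
    "water": {"density": 998.0, "specific_heat": 4186.0},
}


def volumetric_heat_capacity(fluid: str) -> float:
    """Heat absorbed per cubic metre per kelvin of temperature rise, J/(m³·K)."""
    props = FLUIDS[fluid]
    return props["density"] * props["specific_heat"]


def flow_needed_m3s(fluid: str, heat_watts: float, delta_t_k: float) -> float:
    """Volumetric flow of the fluid needed to carry heat_watts at a delta_t_k rise."""
    return heat_watts / (volumetric_heat_capacity(fluid) * delta_t_k)


ratio = volumetric_heat_capacity("water") / volumetric_heat_capacity("air")
print(f"water carries ~{ratio:,.0f}x more heat per unit volume than air")
```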

- Liquid contacting a heat sink. As liquid flows through a server and comes in contact with a heat sink within the ITE, heat is absorbed and transferred away from the components.

- Immersion cooling. ITE components are immersed in a non-conductive liquid, which absorbs the heat and carries it away from the components.

- Dielectric fluid with state change. The ITE components are wetted with a non-conductive (dielectric) liquid. The liquid absorbs heat as it changes to a gas and carries that heat to another heat exchanger, where the fluid releases the heat and condenses back into a liquid.
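In the state-change approach, heat is absorbed mainly as latent heat of vaporization rather than as a temperature rise. A minimal sketch, assuming the liquid reaches the components at its boiling point: the evaporation rate equals the heat load divided by the fluid's latent heat. The latent heat value below is a hypothetical placeholder, not a property of any specific product.

```python
def vapor_mass_flow_kg_s(heat_watts: float, latent_heat_j_per_kg: float) -> float:
    """Mass of dielectric fluid that must evaporate each second to absorb heat_watts.

    Assumes all heat goes into the phase change (liquid enters at its boiling point).
    """
    if latent_heat_j_per_kg <= 0:
        raise ValueError("latent heat must be positive")
    return heat_watts / latent_heat_j_per_kg


# Hypothetical values: a 10 kW load and a fluid with 100 kJ/kg latent heat.
HYPOTHETICAL_LATENT_HEAT = 100_000.0  # J/kg, placeholder only
print(f"{vapor_mass_flow_kg_s(10_000, HYPOTHETICAL_LATENT_HEAT):.2f} kg/s")
```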

Space Cooling

In most data center designs, heated air from the servers mixes with room air and eventually makes its way back to a CRAC/CRAH unit. There, the air releases its heat via a coil to a coolant: refrigerant in a CRAC, chilled water in a CRAH. The refrigerant or chilled water carries the heat out of the space. Air discharged from the CRAC/CRAH is often 13-15.5°C (55-60°F), and the unit's fans blow it into the raised floor plenum. The standard CRAC/CRAH systems from many manufacturers and designers control the unit’s cooling based on return air temperature.
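Control based on the temperature of the air returning to the unit can be illustrated with a simple proportional controller. This is a hypothetical sketch, not any manufacturer's control algorithm: the 24°C setpoint and 4 K proportional band are assumed values.

```python
def cooling_output_fraction(return_air_c: float, setpoint_c: float = 24.0,
                            proportional_band_k: float = 4.0) -> float:
    """Proportional control of cooling output from return air temperature.

    Output is 0 at or below the setpoint and rises linearly to 1 (full
    capacity) when the return air is proportional_band_k above the setpoint.
    Setpoint and band are hypothetical values for illustration.
    """
    error = return_air_c - setpoint_c
    return min(max(error / proportional_band_k, 0.0), 1.0)


for t in (23.0, 25.0, 30.0):
    print(t, cooling_output_fraction(t))
```

Because a controller like this only sees the mixed return air, it cannot tell whether individual server inlets are too warm, which can contribute to uneven cooling.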

Layout and Heat Rejection Options

While raised floor cooling works in low-density spaces, it may not meet the demands of increasing heat density and efficiency as ITE becomes more powerful and faster.

Operators have begun using practices and technologies such as Hot Aisle/Cold Aisle layouts, ceiling return plenums, raised floor management, and server blanking panels to improve cooling performance in raised floor environments. These methods clearly perform better than a raised floor alone.

To improve cooling further, design engineers began to experiment with containment. The concept is straightforward: erect a physical barrier to separate cool server intake air from heated server exhaust air. Preventing cool supply air and heated exhaust air from mixing has several benefits, including:

- More consistent inlet air temperatures.

- The temperature of the air supplied to the white space can be raised, improving options for efficiency.

- The air returning to the coil is warmer, which typically makes the cooling equipment operate more efficiently.

- The space can support higher-density equipment.
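A simple mixing model shows why containment helps. The sketch below assumes hypothetical supply and exhaust temperatures and a hypothetical 20% recirculation and bypass rate, and treats each air stream as a weighted average of its sources. Without containment, recirculated exhaust warms the server inlets while bypassed supply air cools the return air reaching the coil; ideal containment removes both effects.

```python
def mix_temperature(t_a: float, t_b: float, fraction_b: float) -> float:
    """Temperature of a stream blended from two sources (equal density assumed)."""
    return (1.0 - fraction_b) * t_a + fraction_b * t_b


# Hypothetical conditions in °C.
SUPPLY_C = 18.0
EXHAUST_C = 32.0

# Without containment: assume 20% of exhaust recirculates into server inlets
# and 20% of supply air bypasses the servers straight back to the coil.
inlet_open = mix_temperature(SUPPLY_C, EXHAUST_C, 0.20)
return_open = mix_temperature(EXHAUST_C, SUPPLY_C, 0.20)

# With ideal containment: no recirculation and no bypass.
inlet_contained = mix_temperature(SUPPLY_C, EXHAUST_C, 0.0)
return_contained = mix_temperature(EXHAUST_C, SUPPLY_C, 0.0)

print(f"open:      inlet {inlet_open:.1f}°C, return {return_open:.1f}°C")
print(f"contained: inlet {inlet_contained:.1f}°C, return {return_contained:.1f}°C")
```

The model holds the exhaust temperature fixed for simplicity; in practice, warmer inlet air would also raise the exhaust temperature.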

Typically, in a contained environment, air leaves the air handling equipment at a temperature and humidity suitable for ITE operation. The air passes through the ITE only once, then returns to the air handling equipment for conditioning.

Hot Aisle Containment compared to Cold Aisle Containment

In a Cold Aisle containment system, cool air from the cooling system is contained, while hot server exhaust air circulates freely in the room outside the containment. In a Hot Aisle containment system, hot exhaust air is contained, directed upward, and returned to the cooling equipment, usually by fan power. AKCP has sensors designed specifically for hot and cold aisle containment monitoring, including cabinet thermal maps, differential air pressure sensors, and the cabinet analysis sensor.
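Differential pressure readings across the containment can be evaluated with a simple rule. This is a hypothetical sketch that does not use any AKCP API: it takes two static pressure readings in pascals, one from the cold side and one from the hot side, and checks that the difference is small and positive. The thresholds are placeholders that would need tuning for a real site.

```python
# Hypothetical thresholds in pascals; tune for the actual site and sensors.
MIN_DIFF_PA = 1.0
MAX_DIFF_PA = 10.0


def containment_pressure_status(cold_side_pa: float, hot_side_pa: float) -> str:
    """Classify the cold-side minus hot-side static pressure difference.

    A small positive difference means air tends to flow from the cold side
    through the servers to the hot side, as intended.
    """
    diff = cold_side_pa - hot_side_pa
    if diff < 0:
        return "ALERT"  # reversed: hot exhaust may be pushed back to the cold side
    if diff < MIN_DIFF_PA:
        return "LOW"    # little margin: leaks or undersupply may allow mixing
    if diff > MAX_DIFF_PA:
        return "HIGH"   # large margin: cooling air may be oversupplied
    return "OK"


# Hypothetical readings (Pa) against a common reference.
print(containment_pressure_status(cold_side_pa=4.0, hot_side_pa=0.5))  # → OK
```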

Cold Aisle containment suits a raised floor retrofit, especially if there is no ceiling return plenum. Its advantage is that the cabinets can stay more or less as they are, as long as they are already arranged in a Cold Aisle/Hot Aisle layout. The contractor can build the containment system around the existing Cold Aisles.

Although most Cold Aisle containment systems are built around a raised floor, Cold Aisle containment can also be used with other air delivery systems, such as overhead ducting.

Overall, designers and operators tend to prefer Cold Aisle containment for cabinet layouts in which the ITE load is stable and does not vary widely.

In a controlled Hot Aisle containment environment, the ITE hardware determines how much cool air is required. The cooling system fills the room with temperate air. As server fans draw air from the cool side of the room, the pressure difference quickly moves that air through the servers to the warm, contained side.

To make this work, the server room should have a large, open ceiling plenum with clear returns to the air-cooling equipment. A large, open ceiling plenum is easier to provide than a large, open raised floor, because the ceiling plenum does not have to support the server cabinets. The air handlers draw warm air from the ceiling return plenum.

Hot Aisle containment systems can provide a larger volume of conditioned air than Cold Aisle containment. In a Cold Aisle containment system, the conditioned air in the data center at any given time is limited to the air in the supply plenum and the contained Cold Aisles. In a Hot Aisle containment system, the room is filled with conditioned air, and the hot air is limited to the air inside the Hot Aisle containment and the ceiling return plenum.

Hot Aisle containment gives operators the option to remove the raised floor from the design. Temperate air, often from outside, fills the room. The containment prevents mixing, so cool air does not have to be delivered directly in front of the ITE. Removing the raised floor reduces initial costs.

Other Cooling Methods

There are other ways of removing heat from the server room, including in-row and in-cabinet cooling. For example, rear-door heat exchangers capture heat from the servers and remove it via a cooling liquid.

In-row cooling units are placed close to the servers, typically as a unit within a row of ITE cabinets. Overhead cooling units can also be located above the server cabinets. These close-coupled cooling systems minimize the fan energy required to move the air.
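The fan-energy saving follows from the basic fan power relation: power is roughly airflow times the pressure the fan must overcome, divided by fan efficiency. Moving air a short distance to nearby racks generally involves less pressure drop than pushing it through an underfloor plenum, perforated tiles, and the open room. The pressures and efficiency below are hypothetical values chosen only to illustrate the relationship.

```python
def fan_power_watts(airflow_m3s: float, static_pressure_pa: float,
                    efficiency: float = 0.6) -> float:
    """Fan power needed to move airflow_m3s against static_pressure_pa.

    Uses P = V * dp / efficiency. The default efficiency is hypothetical.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return airflow_m3s * static_pressure_pa / efficiency


# Hypothetical comparison for the same 2 m³/s of air.
room_level = fan_power_watts(2.0, 500.0)     # long path: plenum, tiles, room
close_coupled = fan_power_watts(2.0, 150.0)  # short path next to the racks
print(f"room-level: {room_level:.0f} W, close-coupled: {close_coupled:.0f} W")
```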

Heat Rejection

After heat is removed from the white space, it must be rejected to a heat sink. The most readily available heat sink is the atmosphere. Other options include a body of water or the ground itself.

There are many techniques for transferring data center heat to its final heat sink. Among those are:

- CRAH units with water-cooled chillers and cooling towers

- CRAH units with air-cooled chillers

- Split system CRAC units

- CRAC units with cooling towers or fluid coolers

- Pumped liquid (e.g., from in-row cooling) and cooling towers

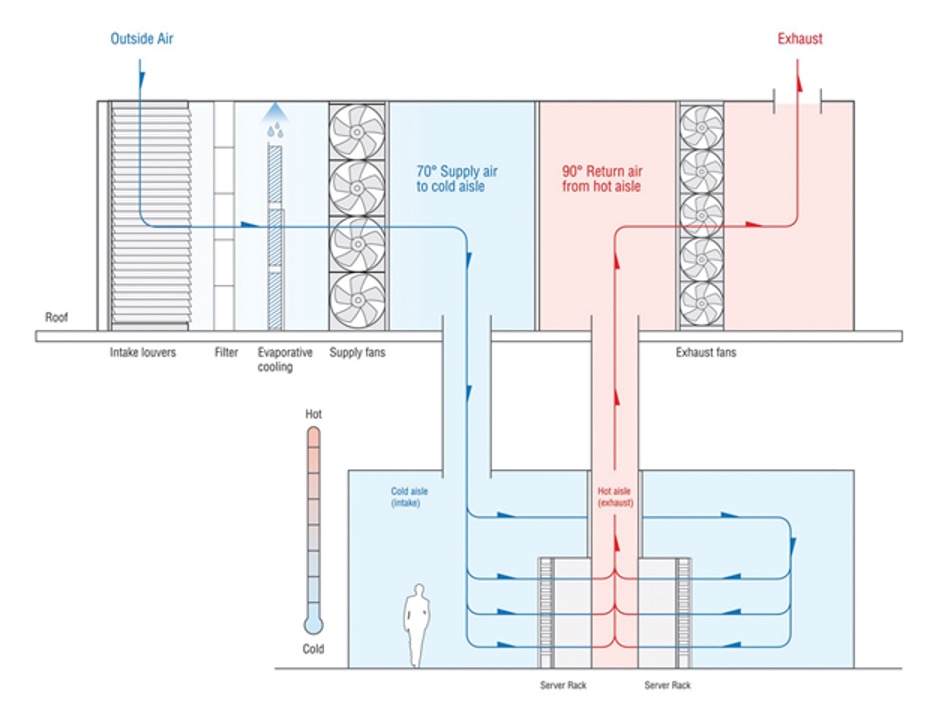

- Airside economization

- Airside economization with direct evaporative cooling (DEC)

- Indirect evaporative cooling (IDEC)

Economizer Cooling

Most cooling systems use some form of refrigerant-based thermodynamic cycle to achieve the prescribed environmental conditions. Economization is cooling in which the refrigeration cycle is turned off, partially or completely, for some or all of the time.

Airside economizers draw outside air into the data center, often blending it with return air to reach the right supply temperature. IDEC is a variant of this approach in which the outside air does not enter the data center but removes heat from the inside air via a heat exchanger.
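The blending step is a simple mixing balance. This sketch, assuming equal air densities and ignoring humidity limits, computes what fraction of outside air to mix with return air to reach a target supply temperature; all temperatures are hypothetical.

```python
from typing import Optional


def outside_air_fraction(return_c: float, outside_c: float,
                         target_supply_c: float) -> Optional[float]:
    """Fraction of outside air to blend with return air to hit the target supply.

    Based on the mixing balance: supply = f * outside + (1 - f) * return.
    Returns None when outside air is warmer than the target, meaning mixing
    alone cannot reach it and other cooling is needed.
    """
    if return_c <= target_supply_c:
        return 0.0  # return air is already cool enough
    if outside_c > target_supply_c:
        return None
    return (return_c - target_supply_c) / (return_c - outside_c)


# Hypothetical temperatures in °C.
print(outside_air_fraction(return_c=35.0, outside_c=10.0, target_supply_c=20.0))  # → 0.6
print(outside_air_fraction(return_c=35.0, outside_c=28.0, target_supply_c=20.0))  # → None
```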

Evaporative cooling methods (direct or indirect) evaporate water to extend the economizer's cooling capacity. The evaporating water absorbs energy from the air, lowering its dry bulb temperature until it approaches the air's wet bulb (saturated) temperature.
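The approach toward the wet bulb temperature is often described with a saturation effectiveness: the fraction of the gap between dry bulb and wet bulb that the cooler closes. The sketch assumes a hypothetical effectiveness of 0.85 and hypothetical weather. It also shows why evaporative cooling works best in dry climates, where that gap is large.

```python
def direct_evaporative_outlet_c(dry_bulb_c: float, wet_bulb_c: float,
                                effectiveness: float = 0.85) -> float:
    """Leaving air temperature of a direct evaporative cooler.

    The dry bulb is driven toward, but not below, the wet bulb temperature.
    The default effectiveness (0 to 1) is a hypothetical value.
    """
    if wet_bulb_c > dry_bulb_c:
        raise ValueError("wet bulb cannot exceed dry bulb")
    if not 0.0 <= effectiveness <= 1.0:
        raise ValueError("effectiveness must be between 0 and 1")
    return dry_bulb_c - effectiveness * (dry_bulb_c - wet_bulb_c)


# Hypothetical hot, dry afternoon: 35°C dry bulb, 20°C wet bulb.
print(f"{direct_evaporative_outlet_c(35.0, 20.0):.2f}°C")
```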

In waterside economizer systems, the refrigeration cycle is not needed when outside conditions are cold enough to achieve the desired chilled water temperature. The chilled water passes through a heat exchanger and rejects its heat to the condenser water loop.
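Whether the refrigeration cycle can be bypassed depends on how cold the cooling tower can make the water, which is limited by the outdoor wet bulb temperature. A hedged sketch: the approach temperatures and setpoints below are hypothetical, and the "partial" mode assumes a plant arranged so the economizer heat exchanger can pre-cool return water ahead of the chillers.

```python
def waterside_economizer_mode(wet_bulb_c: float, chw_supply_setpoint_c: float,
                              chw_return_c: float, tower_approach_k: float = 4.0,
                              hx_approach_k: float = 1.5) -> str:
    """Pick an operating mode from the outdoor wet bulb temperature.

    Tower water can only get within tower_approach_k of the wet bulb, and the
    heat exchanger adds hx_approach_k more. Both approaches are hypothetical.
    """
    achievable_chw_c = wet_bulb_c + tower_approach_k + hx_approach_k
    if achievable_chw_c <= chw_supply_setpoint_c:
        return "full"     # refrigeration cycle can be switched off
    if achievable_chw_c < chw_return_c:
        return "partial"  # economizer pre-cools return water; chillers trim the rest
    return "off"          # too warm outside; chillers carry the whole load


for wb in (5.0, 10.0, 20.0):
    print(wb, waterside_economizer_mode(wb, chw_supply_setpoint_c=12.0, chw_return_c=18.0))
```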

Design Criteria

Before designing a cooling system, the team must agree on a set of design criteria.

Heat load, often measured in kilowatts, should be the primary consideration in designing the cooling system.

Heat load has two components: the total heat to remove and its density. Data centers usually measure heat density in watts per square foot. Many argue that density is better measured in kilowatts per cabinet, which is practical when the number of cabinets to be deployed is known.
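The two density metrics describe the same load in different terms. The sketch below, using a hypothetical room, computes both: watts per square foot depends on how much floor area is counted, while kilowatts per cabinet ties the load to where the equipment actually sits.

```python
def density_metrics(total_it_kw: float, floor_area_ft2: float,
                    cabinets: int) -> dict[str, float]:
    """Express one heat load as watts per square foot and kilowatts per cabinet."""
    if floor_area_ft2 <= 0 or cabinets <= 0:
        raise ValueError("floor area and cabinet count must be positive")
    return {
        "watts_per_ft2": total_it_kw * 1000.0 / floor_area_ft2,
        "kw_per_cabinet": total_it_kw / cabinets,
    }


# Hypothetical room: 500 kW of ITE, 5,000 ft² of white space, 100 cabinets.
print(density_metrics(500.0, 5000.0, 100))
```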

The owner and designer must determine the goals of the data center and the mechanical, electrical, and control systems required to achieve them. When designing the systems, the team must have access to the building. Once the system is designed, the team adds redundant equipment, and then perhaps a little more. The constructed systems can be highly stable if both the design and its operation are sound.
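Adding redundant equipment is commonly expressed as N+1: N units sized to carry the full design load, plus at least one spare. A minimal sketch with a hypothetical heat load and unit capacity:

```python
import math


def cooling_units_required(heat_load_kw: float, unit_capacity_kw: float,
                           redundant_units: int = 1) -> int:
    """Units needed to carry the load (N) plus redundant units (e.g. N+1)."""
    if unit_capacity_kw <= 0:
        raise ValueError("unit capacity must be positive")
    n = math.ceil(heat_load_kw / unit_capacity_kw)
    return n + redundant_units


# Hypothetical: 500 kW heat load served by 120 kW units with N+1 redundancy.
print(cooling_units_required(500.0, 120.0))  # → 6
```

If all six units share the 500 kW load, each runs at about 69% of its 120 kW rating, so the equipment spends much of its life below full capacity.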

It is worth noting that data centers do not usually operate at their design load. For parts of its lifespan, a data center may run lightly loaded. Operators and designers should take this into account so that the data center runs efficiently at partial load as well as at maximum load.

Conclusion

Not all data centers use the same cooling technology. Some are located in cooler regions, where the need for refrigeration is reduced.

As technology in data centers becomes more powerful and efficient, raised floor cooling may not be enough to keep servers operating. More operators are turning to liquids, such as large reservoirs of water, to assist with cooling. Until liquid-cooled servers are broadly adopted, the primary approach will remain optimizing air-cooled, contained data centers.

Learn more about how AKCP can help monitor your data center environment.

Reference links:

https://journal.uptimeinstitute.com/a-look-at-data-center-cooling-technologies/