Like any other machine, IT equipment in data centers produces waste, in this case heat generated as a byproduct of the power it consumes. This heat must be removed for data center systems to function properly, which makes cooling a vital component of any data center.

Conventional data centers use CRAC (computer room air conditioner) and CRAH (computer room air handler) units to cool the facility. These systems deliver chilled air through ducts or through a raised floor. However, they fail to account for a few things: the location of the IT equipment, and how power is distributed among different IT workloads.

As a result, CRAC and CRAH cooling can be inefficient and can leave “hotspots” that harm delicate IT equipment. Operators often oversupply cooling capacity in hopes of averting heat-related performance issues, but this only leads to further overconsumption of energy.

In addition to heat, humidity poses another threat that data center cooling systems must handle. Meanwhile, many small to midsize data centers are still operated on site, and the cooling tips below will help keep their equipment from overheating.

Cooling Tips for Data Center Efficiency

There are many ways to improve data center cooling efficiency, but every operator should remember a few essential tips: monitor temperatures, eliminate hot spots, install adequate equipment, and optimize the data center design.

Monitor Cooling Systems

Photo Credit: www.upsite.com

Optimal cooling-system operation differs from one data center to another. It typically depends on the facility's geographic location, size, equipment, and power density. Cooling requirements also change whenever equipment is installed, replaced, or moved.

Data center infrastructure management (DCIM) systems can help ensure proper cooling. One of their functions is real-time monitoring of the energy consumed by IT equipment and by infrastructure components. DCIM software also offers visualization tools that make energy consumption easier to understand. Some DCIM systems include cooling management modules that correlate energy and cooling data, and DCIM can also work alongside external cooling management systems.

According to the latest ASHRAE TC 9.9 guidelines, a cold-aisle (server inlet) temperature of 27°C (80°F) is still “safe,” and an inlet temperature of up to 32°C (90°F) remains within the A1 allowable range. Although 27°C (80°F) is warmer than the traditional 21°C (70°F) set point, it is not as bad for the servers as it may seem.
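
To make these limits concrete, here is a minimal Python sketch that classifies a server inlet reading. It assumes the ASHRAE recommended envelope of 18 to 27°C and the A1 allowable envelope of 15 to 32°C; the lower bounds are not stated in this article and are included only as assumptions, and `classify_inlet` is a hypothetical helper rather than part of any monitoring product.

```python
# Hypothetical helper: classify a server inlet (cold-aisle) temperature
# against an assumed ASHRAE envelope:
#   recommended 18-27 °C, A1 allowable 15-32 °C.

RECOMMENDED_C = (18.0, 27.0)
A1_ALLOWABLE_C = (15.0, 32.0)


def c_to_f(celsius: float) -> float:
    """Convert degrees Celsius to degrees Fahrenheit."""
    return celsius * 9.0 / 5.0 + 32.0


def classify_inlet(celsius: float) -> str:
    """Return 'recommended', 'allowable', or 'out of range' for an inlet reading."""
    low, high = RECOMMENDED_C
    if low <= celsius <= high:
        return "recommended"
    low, high = A1_ALLOWABLE_C
    if low <= celsius <= high:
        return "allowable"
    return "out of range"


if __name__ == "__main__":
    for reading in (21.0, 27.0, 30.0, 34.0):
        print(f"{reading:.0f} °C ({c_to_f(reading):.1f} °F): {classify_inlet(reading)}")
```

Checking the Fahrenheit conversion this way also shows that the article's rounded figures are close approximations (27°C is 80.6°F, 32°C is 89.6°F).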

Consider taking temperature measurements inside the front face of the cabinet, where servers draw in cool air. This intake reading is the essential measurement, and the only valid one for judging equipment inlet conditions.

The highest temperatures are typically at the top of the rack and the coolest at the bottom, so you may want to move servers closer to the bottom of the racks. Do not forget to install blanking panels over open spaces in the front of the racks; they stop hot exhaust air from recirculating to the rack fronts. There is no need to worry if rear (exhaust) temperatures reach 37°C (about 100°F).
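
Finding the open spaces that need blanking panels is simple bookkeeping over rack units (U). The sketch below assumes a 42U rack numbered from U1 at the bottom and describes each device as a hypothetical `(bottom_u, height_u)` pair; it only lists the gaps and does not model airflow.

```python
# Hypothetical sketch: list the empty rack-unit (U) ranges that should get
# blanking panels. Assumes a 42U rack numbered U1 (bottom) to U42 (top) and
# devices given as (bottom_u, height_u) pairs.

from typing import List, Optional, Tuple

RACK_HEIGHT_U = 42


def open_spaces(devices: List[Tuple[int, int]],
                rack_height: int = RACK_HEIGHT_U) -> List[Tuple[int, int]]:
    """Return (first_u, last_u) ranges not occupied by any device."""
    occupied = [False] * (rack_height + 1)  # index 0 unused
    for bottom, height in devices:
        if bottom < 1 or height < 1 or bottom + height - 1 > rack_height:
            raise ValueError(f"device at U{bottom} (height {height}U) does not fit")
        for u in range(bottom, bottom + height):
            occupied[u] = True

    gaps: List[Tuple[int, int]] = []
    start: Optional[int] = None
    for u in range(1, rack_height + 1):
        if not occupied[u] and start is None:
            start = u                      # a gap begins
        elif occupied[u] and start is not None:
            gaps.append((start, u - 1))    # a gap ends just below this device
            start = None
    if start is not None:
        gaps.append((start, rack_height))  # gap runs to the top of the rack
    return gaps


if __name__ == "__main__":
    # Servers packed toward the bottom of the rack (illustrative layout).
    servers = [(1, 2), (3, 2), (5, 1), (8, 4)]
    for first, last in open_spaces(servers):
        print(f"Blanking panel needed: U{first}-U{last} ({last - first + 1}U)")
```

For this layout the sketch reports a 2U gap at U6-U7 and the empty space from U12 to the top of the rack.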

Eliminate Hot Spots in Data Centers

Photo Credit: www.opticooltechnologies.com

Hot spots in data centers are generally caused by airflow problems in which cool air does not reach the IT equipment it is meant for. To prevent them, distribute air evenly and spread heat loads across all racks. Monitor temperatures at the bottom, middle, and top of each rack by installing three temperature sensors per rack.
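
The three-sensors-per-rack layout lends itself to a simple check. This sketch assumes one inlet reading each at the bottom, middle, and top of every rack and flags any reading above 27°C, the upper recommended temperature cited earlier; the rack names, readings, and `find_hot_spots` helper are illustrative.

```python
# Hypothetical sketch: flag hot spots from three inlet sensors per rack
# (bottom, middle, top). The 27 °C limit is an assumed threshold taken from
# the ASHRAE recommended upper bound; racks and readings are made up.

from typing import Dict, List, NamedTuple

HOT_SPOT_LIMIT_C = 27.0


class RackReadings(NamedTuple):
    bottom: float
    middle: float
    top: float


def find_hot_spots(racks: Dict[str, RackReadings],
                   limit: float = HOT_SPOT_LIMIT_C) -> List[str]:
    """Return 'rack:position' labels for every sensor reading above the limit."""
    hot: List[str] = []
    for name, readings in racks.items():
        for position, value in readings._asdict().items():
            if value > limit:
                hot.append(f"{name}:{position}")
    return hot


if __name__ == "__main__":
    room = {
        "A01": RackReadings(bottom=19.5, middle=22.0, top=25.5),
        "A02": RackReadings(bottom=20.0, middle=24.5, top=29.0),
        "B01": RackReadings(bottom=28.0, middle=28.5, top=31.0),
    }
    print(find_hot_spots(room))
```

A single hot sensor at the top of a rack (A02) fits the usual top-to-bottom stratification, while a rack that is hot at every level (B01) suggests cool air is not reaching it at all.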

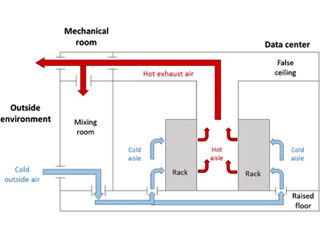

Consider placing in-row cooling units directly within a row of cabinets and racks. A unit can be hung from the ceiling or mounted on top of a cabinet. In-row cooling provides a shorter airflow path, so cool air reaches the IT equipment immediately and more of the data center's cooling capacity goes toward removing heat.

Aisle containment combined with in-row cooling is one of the most efficient data center cooling strategies. Containment prevents cool intake air from mixing with hot exhaust air. In-row cooling is generally paired with cold aisle containment, in which the cold aisle is enclosed to form a closed-loop design that directs cool air onto the equipment.

Optimize Data Center Design

Photo Credit: pinimg.com

If your data center has a raised floor, place the hottest racks in front of the perforated tiles. You can also swap in perforated tiles with different airflow ratings to match the airflow to the heat load. Do not place CRAC/CRAH units close to the perforated tiles: this creates a “short circuit” in which cool air flows straight back into the CRAC/CRAH units, depriving the room of a sufficient supply of cool air.
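
To match tile airflow to heat load, it helps to estimate how much air a rack actually needs. The sketch below uses the standard sensible-heat balance, airflow = P / (ρ · c_p · ΔT), with assumed sea-level air properties (ρ ≈ 1.2 kg/m³, c_p ≈ 1005 J/(kg·K)). The rack loads and the 11°C temperature rise are illustrative, and the result is only an estimate; actual tile selection should rely on measured tile airflow.

```python
# Hypothetical sketch: estimate the cold-air flow a rack needs, so perforated
# tiles can be matched to its heat load. Sensible-heat balance:
#   airflow [m^3/s] = P [W] / (rho [kg/m^3] * c_p [J/(kg*K)] * delta_T [K])
# Air properties below are assumed sea-level values.

AIR_DENSITY = 1.2            # kg/m^3 (assumption)
AIR_SPECIFIC_HEAT = 1005.0   # J/(kg*K) (assumption)
M3S_TO_CFM = 2118.88         # 1 m^3/s expressed in cubic feet per minute


def required_airflow_cfm(heat_load_w: float, delta_t_c: float) -> float:
    """Airflow (CFM) that carries away heat_load_w with a front-to-rear rise of delta_t_c."""
    if delta_t_c <= 0:
        raise ValueError("temperature rise must be positive")
    m3_per_s = heat_load_w / (AIR_DENSITY * AIR_SPECIFIC_HEAT * delta_t_c)
    return m3_per_s * M3S_TO_CFM


if __name__ == "__main__":
    # Illustrative rack loads (W) with an assumed 11 °C (about 20 °F) air temperature rise.
    for rack, watts in {"A01": 3000.0, "A02": 8000.0}.items():
        print(f"{rack}: {required_airflow_cfm(watts, 11.0):.0f} CFM")
```

Because airflow scales linearly with heat load, the 8 kW rack needs roughly 2.7 times the air of the 3 kW rack, which is why it belongs in front of the higher-flow tiles.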

Avoid bypass airflow. Cable openings in the raised floor let cold air escape to areas where it is not needed, which reduces the supply of cold air reaching the floor vents in the cold aisle. Survey the raised floor for openings, including those inside the cabinets.

Survey the rear of the racks as well, and check whether cables are blocking the exhaust airflow. Exhaust air that cannot escape creates excessive back pressure on the IT equipment fans, which can cause the equipment to overheat. If possible, use shorter network cables, and declutter cabling at the rear of the racks so it does not impede airflow.

Avoid recirculation as well. Ideally, hot air exhausted from the rear of the cabinets should flow directly back to the CRAC/CRAH unit without mixing with the cold air. If your data center has a plenum ceiling, use it as a return path for warm air: fit a ducted collar leading from the CRAC/CRAH return-air intake up into the ceiling. A simple duct system like this generally has an immediate effect on room temperature. Note that the warmer the return air, the higher the efficiency and cooling capacity of the CRAC/CRAH unit.
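
One way to check for both bypass and recirculation is the Return Temperature Index (RTI), a metric proposed by Magnus Herrlin that the original article does not mention. It compares the temperature rise across the CRAC/CRAH units with the rise across the IT equipment: roughly 100% means the two are balanced, values below 100% point to bypass air, and values above 100% point to recirculation. The sketch assumes averaged readings in °C, and the ±5-point tolerance band is an arbitrary illustrative choice.

```python
# Sketch of the Return Temperature Index (RTI), an airflow metric proposed by
# M. K. Herrlin. Inputs are assumed room-average temperatures in °C.
#   RTI = (T_return - T_supply) / (T_it_exhaust - T_it_intake) * 100
# About 100 %: balanced; below 100 %: bypass air; above 100 %: recirculation.


def return_temperature_index(t_supply: float, t_return: float,
                             t_it_intake: float, t_it_exhaust: float) -> float:
    """Return RTI as a percentage."""
    it_rise = t_it_exhaust - t_it_intake
    if it_rise <= 0:
        raise ValueError("IT exhaust must be warmer than IT intake")
    return (t_return - t_supply) / it_rise * 100.0


def diagnose(rti: float, tolerance: float = 5.0) -> str:
    """Interpret an RTI value; the tolerance band is an illustrative assumption."""
    if rti < 100.0 - tolerance:
        return "bypass: cold supply air is returning without cooling IT equipment"
    if rti > 100.0 + tolerance:
        return "recirculation: hot exhaust air is re-entering IT intakes"
    return "balanced"


if __name__ == "__main__":
    # Illustrative readings: supply, return, IT intake, IT exhaust (°C).
    samples = {
        "leaky raised floor": (18.0, 26.0, 20.0, 32.0),
        "well-ducted return": (18.0, 30.0, 20.0, 32.0),
        "missing blanking panels": (18.0, 32.0, 24.0, 34.0),
    }
    for label, readings in samples.items():
        rti = return_temperature_index(*readings)
        print(f"{label}: RTI {rti:.0f}% -> {diagnose(rti)}")
```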

Bonus Energy Efficiency Tips

Turn off the lights when the room is unoccupied. This can save 1% to 3% of the heat and electrical load, which in turn can lower the room temperature by 1°C to 2°C.

Unplug any equipment unnecessary to data center operations.

You may consider installing temporary roll-in cooling units, but only if their heat can be exhausted to an external area: the exhaust duct of each roll-in unit must lead outside the controlled space.

AKCP Monitoring Solutions

AKCP provides a DCIM solution perfect for your data center. AKCPro Server integrates environmental, security, power, access control, and video monitoring in a single, easy-to-use software package.

Environmental Monitoring

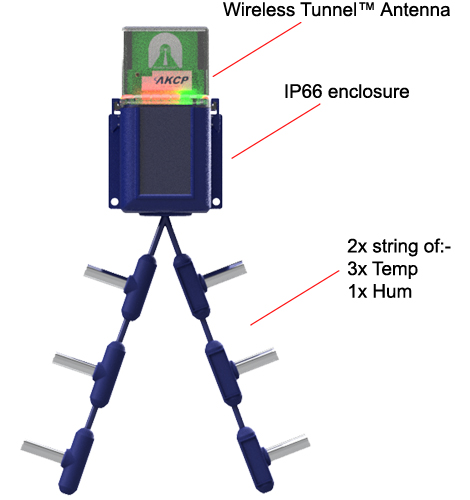

Wireless Thermal Mapping of IT Cabinets

Monitor temperature, humidity, airflow, and water leaks to keep track of your data center’s environmental status. Configure rack maps to show the thermal properties of your computer cabinets: the temperature at the top, middle, and bottom, at the front and rear, as well as temperature differentials.

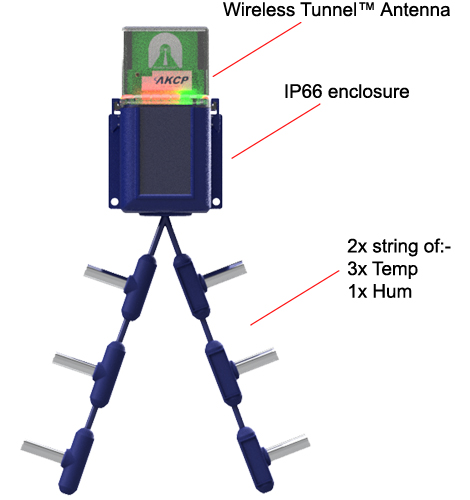

Wireless thermal mapping of your IT cabinets uses three temperature sensors at the front and three at the rear to monitor intake and exhaust air temperatures, and it reports the temperature differential (ΔT) between the front and rear of the cabinet. Wireless thermal maps work with all Wireless Tunnel™ Gateways.
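
The front-to-rear differential is a simple subtraction per sensor level. This sketch assumes three front and three rear readings keyed by position, with made-up values; it illustrates the calculation only and is not the AKCPro Server API or data format.

```python
# Illustrative cabinet ΔT calculation from three front (intake) and three rear
# (exhaust) sensors. Position names and readings are hypothetical.

from typing import Dict

POSITIONS = ("top", "middle", "bottom")


def cabinet_delta_t(front: Dict[str, float], rear: Dict[str, float]) -> Dict[str, float]:
    """Return rear-minus-front ΔT (°C) for each position, plus their average."""
    deltas = {pos: rear[pos] - front[pos] for pos in POSITIONS}
    deltas["average"] = sum(deltas[pos] for pos in POSITIONS) / len(POSITIONS)
    return deltas


if __name__ == "__main__":
    front = {"top": 25.0, "middle": 22.0, "bottom": 20.0}
    rear = {"top": 38.0, "middle": 34.0, "bottom": 31.0}
    for position, delta in cabinet_delta_t(front, rear).items():
        print(f"{position}: ΔT = {delta:.1f} °C")
```

Comparing the front readings across levels in the same data also shows the top-to-bottom stratification described earlier in the article.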

Thermal maps are integrated into the cabinet rack map view of the AKCPro Server DCIM software.

Power Monitoring

Monitor single-phase and three-phase power, generators, and UPS battery backup power. AKCPro Server performs live power usage effectiveness (PUE) calculations, giving you a complete overview of your power train and showing how adjustments in your data center directly affect your PUE.
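
PUE itself is a simple ratio, defined by The Green Grid as total facility power divided by IT equipment power, so a value of 1.0 would mean every watt goes to IT. The sketch below shows the calculation with hypothetical meter readings; it is an illustration of the metric, not a description of how AKCPro Server computes PUE internally.

```python
# Minimal PUE (power usage effectiveness) sketch:
#   PUE = total facility power / IT equipment power
# All kW figures are hypothetical meter readings.


def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Return the power usage effectiveness ratio."""
    if it_equipment_kw <= 0:
        raise ValueError("IT load must be positive")
    if total_facility_kw < it_equipment_kw:
        raise ValueError("total facility power cannot be below IT power")
    return total_facility_kw / it_equipment_kw


if __name__ == "__main__":
    it_kw = 120.0        # servers, storage, network
    cooling_kw = 54.0    # CRAC/CRAH units, chillers, fans
    other_kw = 6.0       # lighting, UPS losses, etc.
    print(f"PUE = {pue(it_kw + cooling_kw + other_kw, it_kw):.2f}")

    # Example adjustment: airflow fixes cut cooling power by 12 kW.
    print(f"PUE after cooling change = {pue(it_kw + cooling_kw - 12.0 + other_kw, it_kw):.2f}")
```

With these illustrative numbers the ratio drops from 1.50 to 1.40, which is the kind of before-and-after comparison a live PUE readout makes visible.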

AKCP offers a wide range of solutions for all your data center needs, backed by over 30 years of experience in professional sensor solutions. AKCP created the market for networked temperature, environmental, and power monitoring in data centers, and is the world’s oldest and largest manufacturer of networked wired and wireless sensor solutions. For more details, visit AKCP at akcp.com.

Conclusion

Be sure your cooling system is properly maintained, and remember the importance of cleaning all exterior heat-rejection equipment. There is no perfect fix for cooling system issues, especially when the heat load exceeds the cooling system’s capacity. However, improving airflow, which can increase efficiency by 5% to 20%, is a big help. Summer is just around the corner, and efficient airflow will help you get through the hottest days.

These basic cooling tips can be the saving grace of your data center operation, helping ensure a well-managed data center and cost-efficient results.

Install basic remote temperature monitors inside cabinets or racks, and set alarm thresholds so you get early warning of problems as they arise. Having a fallback plan is also beneficial: shut down the least vital systems so that more vital systems can keep operating, and place delicate systems in the coolest areas of the data center.
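
The fallback plan of shutting down the least vital systems first can be sketched as a greedy selection. This assumes each system has a hypothetical priority score (higher means more vital) and an estimated heat load in kW, and that the room's available cooling capacity is known; real shutdown decisions would also depend on dependencies between systems, which this sketch ignores.

```python
# Hypothetical fallback-plan sketch: when the heat load exceeds available
# cooling capacity, shut down the least vital systems first.
# System names, priorities, and kW figures are illustrative assumptions.

from typing import List, NamedTuple


class System(NamedTuple):
    name: str
    priority: int    # higher = more vital
    heat_kw: float   # heat the system adds to the room


def shed_plan(systems: List[System], cooling_capacity_kw: float) -> List[str]:
    """Return names to shut down, least vital first, until the load fits."""
    load = sum(s.heat_kw for s in systems)
    to_shed: List[str] = []
    for system in sorted(systems, key=lambda s: s.priority):
        if load <= cooling_capacity_kw:
            break
        to_shed.append(system.name)
        load -= system.heat_kw
    if load > cooling_capacity_kw:
        raise RuntimeError("load still exceeds capacity after shedding every system")
    return to_shed


if __name__ == "__main__":
    room = [
        System("test-lab", 1, 8.0),
        System("dev-cluster", 2, 12.0),
        System("backup", 3, 6.0),
        System("erp-db", 5, 20.0),
        System("core-network", 9, 4.0),
    ]
    # 50 kW of heat against an assumed 35 kW of remaining cooling capacity.
    print(shed_plan(room, 35.0))
```

Here the plan sheds the test lab and the development cluster, bringing the load from 50 kW to 30 kW while the ERP database and core network stay up.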

Reference Links:

https://gcn.com/articles/2019/01/04/data-center-cooling.aspx

https://www.datacenterdynamics.com/en/opinions/top-summer-cooling-tips-your-data-center/

https://tc0909.ashraetcs.org/documents/ASHRAE%20Networking%20Thermal%20Guidelines.pdf

https://www.akcp.com/blog/optimizing-data-center-cooling/