Cooling a Data Center

Traditional data center cooling relies on computer room air conditioner (CRAC) or computer room air handler (CRAH) infrastructure. These units push cold air through perforated floor tiles and into the server intakes. Once the cold air has passed over the server’s components and been vented as hot exhaust, it is returned to the units for cooling. This is why the return temperature at the CRAC and CRAH units is typically the main control point for the data center floor.

Inefficiency and a lack of fine control are the main concerns with this approach. Cold air is simply vented into the server room. This can be an effective strategy for low-density deployments, but not for higher-density data floors. This is where the cold aisle and hot aisle containment concepts came from. Separating the two air streams maximizes efficiency: cold air remains cold, and hot air is directed to the air handlers without raising the temperature of the surrounding environment.

New data centers have adopted many innovative cooling technologies for efficient operations. These strategies run the gamut from simple approaches to complex heat transfer technologies: free cooling, liquid immersion, and many more, all aimed at saving energy.

CRAC and CRAH Differences

A conventional air conditioner and a CRAC work in much the same way. Both use compressors to keep refrigerant cold, and both operate by blowing air over a cooling coil filled with that refrigerant. The difference is that conventional AC is usually less efficient because it runs at a constant level, whereas a CRAC has precision cooling controls.

A CRAH uses a chilled water plant that supplies the cooling coil with cold water, and it uses this cold water to cool the air.

A CRAH and a CRAC share the same operating principle, except that only the CRAC uses a compressor. This also means that a CRAH uses less energy than a CRAC.

Recommended Temperature of a Data Center

Take note that this is only the recommended temperature for the actual server, not for the server room. The dynamics of heat transfer tend to make the air around the servers warmer. High-density servers, in particular, produce a huge amount of heat. That is why the ambient temperature of the server room is kept cooler, at around 19°C to 21°C.

It is notable that data centers run by big companies like Google operate at higher temperatures. They can do so because their software is designed for “expected failure”: it anticipates that servers will fail on a regular basis and routes around the failed ones. This strategy can work for Google because it is a large enterprise that probably runs many thousands of servers. The same cannot be said for smaller organizations.

Data Center Cooling Market Growth Projections

Photo Credit: cdn.gminsights.co

A market projection from ResearchandMarkets.com indicates average growth of 3% between 2020 and 2025. This suggests continued interest in the data center cooling market.

Furthermore, a Market Study report published in December 2017 reached similar findings. That study also indicates growth related to technology.

More data centers are being built in developing countries, and that is one of the biggest factors behind this anticipated growth. Analysts believe that facility efficiency will be emphasized once data centers begin major operations in these countries. This, naturally, drives the need for more innovative cooling technologies such as liquid cooling.

Misconceptions in Data Center Cooling

Maximizing the efficiency of cooling equipment is crucial in controlling and lowering cooling costs and energy usage. Here are some mistakes data center operators need to avoid.

1. Bad Cabinet Layout

Not planning the arrangement of your data center cabinets is a big mistake. Arranging cabinets without a systematic design wastes money and resources. An ideal cabinet layout should include a hot aisle and cold aisle containment design. In this design, a CRAH should sit at the end of each row to gather the hot exhaust air and return it as cool air.

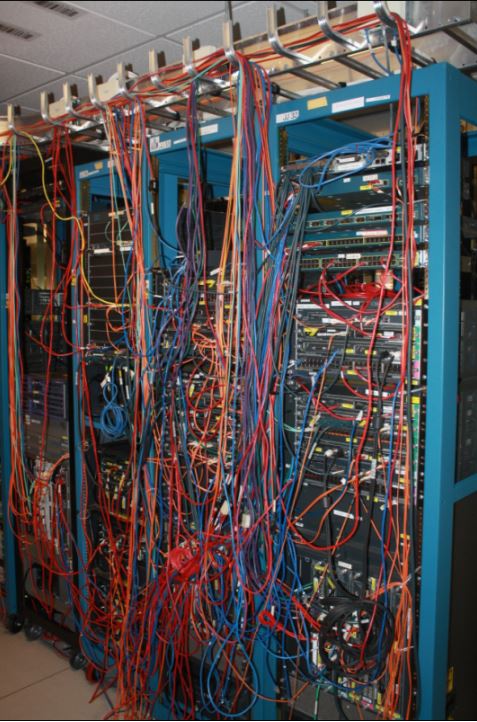

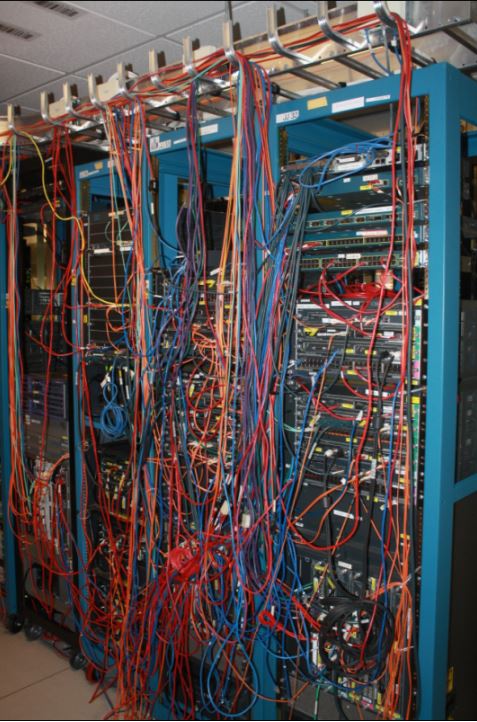

Photo Credit: www.cisco.com

2. Empty Cabinet

You might think that empty cabinets are harmless and won’t affect your data center cooling plans. However, empty cabinets can let hot exhaust air return to the cold aisle. If an empty cabinet is unavoidable in your data center, make sure that the cold aisle is well contained.

3. Empty Spaces Between Equipment

The spaces between hardware are the most overlooked factor, and they are tricky culprits that can ruin your airflow management. If the spaces in the cabinet are not sealed, hot air can easily leak back into the cold aisle. As a well-versed operator, you will want to make sure that these spaces are sealed. Small as the fix may be, it can save energy and further optimize your data center cooling system.

4. Raised Floor Leaks

Raised floor leaks occur when cold air leaks under your raised floor and into support columns or adjacent spaces. These leaks can cause a loss of pressure, which can allow dust, humidity, and warm air to enter your cold aisle environment. In order to resolve phantom leaks, someone will need to do a full inspection of the support columns and perimeter and seal any leaks they find.

5. Leaks Around Cable Openings

Just because these openings are under the floor and mostly unnoticeable does not mean they have no effect. Regularly check for unsealed cable openings and for holes under remote power panels and power distribution units. These openings in floors and cabinets can let cold air escape if left unattended. Keep in mind that data center cooling is expensive, so even a small amount of unutilized air is a waste of valuable resources.

6. Multiple Air Handlers Fighting to Control Humidity

A huge amount of energy is wasted when one air handler dehumidifies the air while another humidifies it at the same time. Be sure to plan your humidity control points thoroughly to reduce the risk of this happening.
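One way to spot this kind of conflict is to compare each unit's humidity setpoint and deadband against a shared reading. The sketch below is illustrative only: the `AirHandler` class, its fields, the unit names, and the simple deadband model are all assumptions made for the example, not any vendor's control logic.

```python
from dataclasses import dataclass


@dataclass
class AirHandler:
    # Hypothetical model of one unit's humidity control settings.
    name: str
    setpoint_rh: float  # target relative humidity, in percent
    deadband_rh: float  # tolerated deviation on either side of the setpoint

    def action(self, measured_rh: float) -> str:
        """Return what this unit would do at the given relative humidity."""
        if measured_rh > self.setpoint_rh + self.deadband_rh:
            return "dehumidify"
        if measured_rh < self.setpoint_rh - self.deadband_rh:
            return "humidify"
        return "idle"


def find_fighting_units(units, measured_rh):
    """Return (humidifying, dehumidifying) names when both groups are non-empty."""
    humidifying = [u.name for u in units if u.action(measured_rh) == "humidify"]
    dehumidifying = [u.name for u in units if u.action(measured_rh) == "dehumidify"]
    if humidifying and dehumidifying:
        return humidifying, dehumidifying
    return [], []


# Two units with mismatched setpoints (values chosen only for illustration).
units = [
    AirHandler("CRAH-1", setpoint_rh=45.0, deadband_rh=3.0),
    AirHandler("CRAH-2", setpoint_rh=55.0, deadband_rh=3.0),
]
print(find_fighting_units(units, measured_rh=50.0))
```

With these example values, a room reading of 50% makes the first unit dehumidify while the second humidifies. Aligning the setpoints, or widening the deadbands so they overlap, removes the conflict in this simplified model.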

AKCP Monitoring Solutions

To prevent hardware failure and function optimally, data centers must stay within ASHRAE recommended temperature and humidity ranges. Now more than ever, data center temperature, cooling, and environmental control are important. If any piece of your data center cooling technology were to fail, it could cause a lot of damage within the data center, along with expensive repairs and replacements. To ensure that your data center cooling technology is running at its optimal capacity, monitor the temperature, humidity, and airflow.

Of course, monitoring your data center can be hard. You have to check airflow both below and above the floor. The temperature shifts between hot and cold spots, and humidity can change from your return airspace to the data center floor. As hard as it may be, you can’t simply leave your data center’s environment unmonitored or entrust it to the same systems that control that environment. If the environmental control device and the reporting device are one and the same, they will fail together.

The AKCP Pro airflow sensor is designed for systems that generate heat in the course of their operation and need a steady flow of air to dissipate it. System reliability and safety could be jeopardized if this cooling airflow stops.

The Airflow sensor is placed in the path of the air stream, where the user can monitor the status of the flowing air. The airflow sensor is not a precision measuring instrument. This device is meant to measure the presence or the absence of airflow.

In addition to the sensor’s ON or OFF status, the airflow sensor’s condition can also be read via an SNMP get using its OID. SNMP traps are sent when the status is critical. SNMP polling via get is available, as is a web browser interface. When an alarm condition is activated, the description and location of the fault can be sent via email or page.

AKCP Single Port Temperature Sensor

In situations where both temperature and humidity can be critical, you can keep up to speed on the current conditions using this sensor. Combining temperature and humidity into one sensor frees up an additional intelligent sensor port on your base unit.

- Temperature and Humidity Monitoring on a single sensor port

- Own SNMP OID for data collection via a network

- Temperature measurement from -40ºC – +75ºC

- Relative Humidity measurement from 0% – 100%

- Powered by the base unit, no additional power needed
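Because each sensor has its own SNMP OID, any standard SNMP client can poll it. As a rough sketch, the Python below shells out to the Net-SNMP `snmpget` command-line tool, which must be installed separately. The OID shown is a placeholder rather than a real sensor OID, and the community string and helper names are assumptions for illustration. The sketch also assumes the value comes back as a plain number.

```python
import subprocess

# Placeholder only: look up the real OID for your sensor port in the
# vendor MIB or in the base unit's web interface.
SENSOR_OID = "1.3.6.1.4.1.99999.1.1.0"


def build_snmpget_command(host, oid, community="public"):
    """Build a Net-SNMP `snmpget` command that prints only the value (-Oqv)."""
    return ["snmpget", "-v2c", "-c", community, "-Oqv", host, oid]


def parse_reading(raw_output):
    """Convert the value-only output of snmpget into a float.

    Assumes the agent returns a bare number, optionally wrapped in quotes.
    """
    return float(raw_output.strip().strip('"'))


def read_sensor(host, oid=SENSOR_OID, community="public", timeout_s=5):
    """Poll one OID and return its numeric value (requires snmpget on PATH)."""
    result = subprocess.run(
        build_snmpget_command(host, oid, community),
        capture_output=True,
        text=True,
        check=True,  # raise if snmpget reports an error
        timeout=timeout_s,
    )
    return parse_reading(result.stdout)
```

Calling `read_sensor("192.0.2.10")` would then return the current reading, where the address is a documentation-range placeholder for the base unit's IP.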

All you need to do is set your thresholds for low and high warning and critical parameters. The built-in graphing function of the base unit gives you a pattern of temperature or humidity trends over time.
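The four-level threshold scheme described above can be sketched in a few lines of Python. This is only an illustration of the idea: the `Thresholds` class, the status labels, and the example temperature values are assumptions, not the base unit's actual configuration format or recommended settings.

```python
from dataclasses import dataclass


@dataclass
class Thresholds:
    # Hypothetical container for the four configurable limits.
    low_critical: float
    low_warning: float
    high_warning: float
    high_critical: float


def classify(value, t):
    """Map a reading to a status, checking the most severe limits first."""
    if value <= t.low_critical:
        return "low critical"
    if value >= t.high_critical:
        return "high critical"
    if value <= t.low_warning:
        return "low warning"
    if value >= t.high_warning:
        return "high warning"
    return "normal"


# Example limits in °C, chosen only for illustration.
temperature_limits = Thresholds(
    low_critical=10.0, low_warning=15.0, high_warning=27.0, high_critical=32.0
)

for reading in (8.0, 14.0, 22.0, 29.0, 35.0):
    print(reading, classify(reading, temperature_limits))
```

The same function works for humidity readings by passing a second `Thresholds` instance with percentage limits.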

AKCP Thermal Map Sensor

Data center monitoring with thermal map sensors helps identify and eliminate hotspots in your cabinets by finding areas where the temperature differential between front and rear is too high. A thermal map consists of a string of 6 temperature sensors and an optional 2 humidity sensors. Pre-wired for easy installation in your cabinet, the sensors are placed at the top, middle, and bottom, at both the front and the rear of the cabinet. This configuration monitors the air intake and exhaust temperatures of your cabinet, as well as the temperature differential from front to rear. Use thermal map sensors to identify cabinet hot spots and problem areas.
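To make the front-to-rear differential concrete, here is a minimal sketch that takes the six temperature readings of one cabinet and flags the levels where the differential is too high. The function names, the sample readings, and the 15°C limit are assumptions chosen for illustration. A suitable limit depends on your equipment and is not specified here.

```python
LEVELS = ("top", "middle", "bottom")


def front_to_rear_deltas(front_c, rear_c):
    """Return the exhaust-minus-intake temperature difference per level, in °C."""
    return {level: rear_c[level] - front_c[level] for level in LEVELS}


def flag_hotspots(front_c, rear_c, max_delta_c=15.0):
    """List the cabinet levels whose differential exceeds the allowed maximum."""
    deltas = front_to_rear_deltas(front_c, rear_c)
    return [level for level in LEVELS if deltas[level] > max_delta_c]


# Sample readings in °C for one cabinet (illustrative values only).
front = {"top": 24.0, "middle": 22.0, "bottom": 21.0}
rear = {"top": 42.0, "middle": 35.0, "bottom": 33.0}

print(front_to_rear_deltas(front, rear))
print(flag_hotspots(front, rear))
```

In this example only the top of the cabinet exceeds the assumed limit, which is the kind of pattern that might point to an obstruction or a failed fan near the top of the rack.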

Advantages Of Thermal Map Sensors

- Obstructions within the cabinet

- Server and cooling fan failures

- Insufficient pressure differential to pull air through the cabinet

- Power Usage Effectiveness (PUE)

sensorProbeX+ Base Unit

The AKCP-designed sensorProbeX+ (SPX+) is a metal, rack-mountable base unit that can connect to up to 80 virtual sensors. Sensors can measure temperature, humidity, airflow, smoke, or any other condition you may be worried about in your server room. A wide range of AKCP wired sensors are compatible with the SPX+. It comes in several standard configurations, or can be customized by choosing from a variety of modules such as dry contact inputs, I/Os, an internal modem, analog-to-digital converters, an internal UPS, and additional sensor ports.

Setup is simple with the sensorProbe, sensorProbe+ and securityProbe autosense function. Once sensors are plugged into the intelligent sensor port, the base unit automatically detects the presence of the sensor and configures it for you.

Data Center Cooling at its Best

A great deal of new data center cooling technology is emerging as growth in IoT and data centers continues. More power is also needed to operate facilities and equipment at their peak capacity.

Having solid, best-in-class data center cooling technology also reassures existing clients and attracts new ones looking for a long-term relationship with the data center. Given the difficulties associated with migrating assets and data, finding a data center partner with the power and cooling technology to accommodate both present and future needs provides a strategic advantage for a growing organization.
